📝 26 Jan 2025

Freshly Baked: Here’s how we Blink the LED with Rust Standard Library on Apache NuttX RTOS…

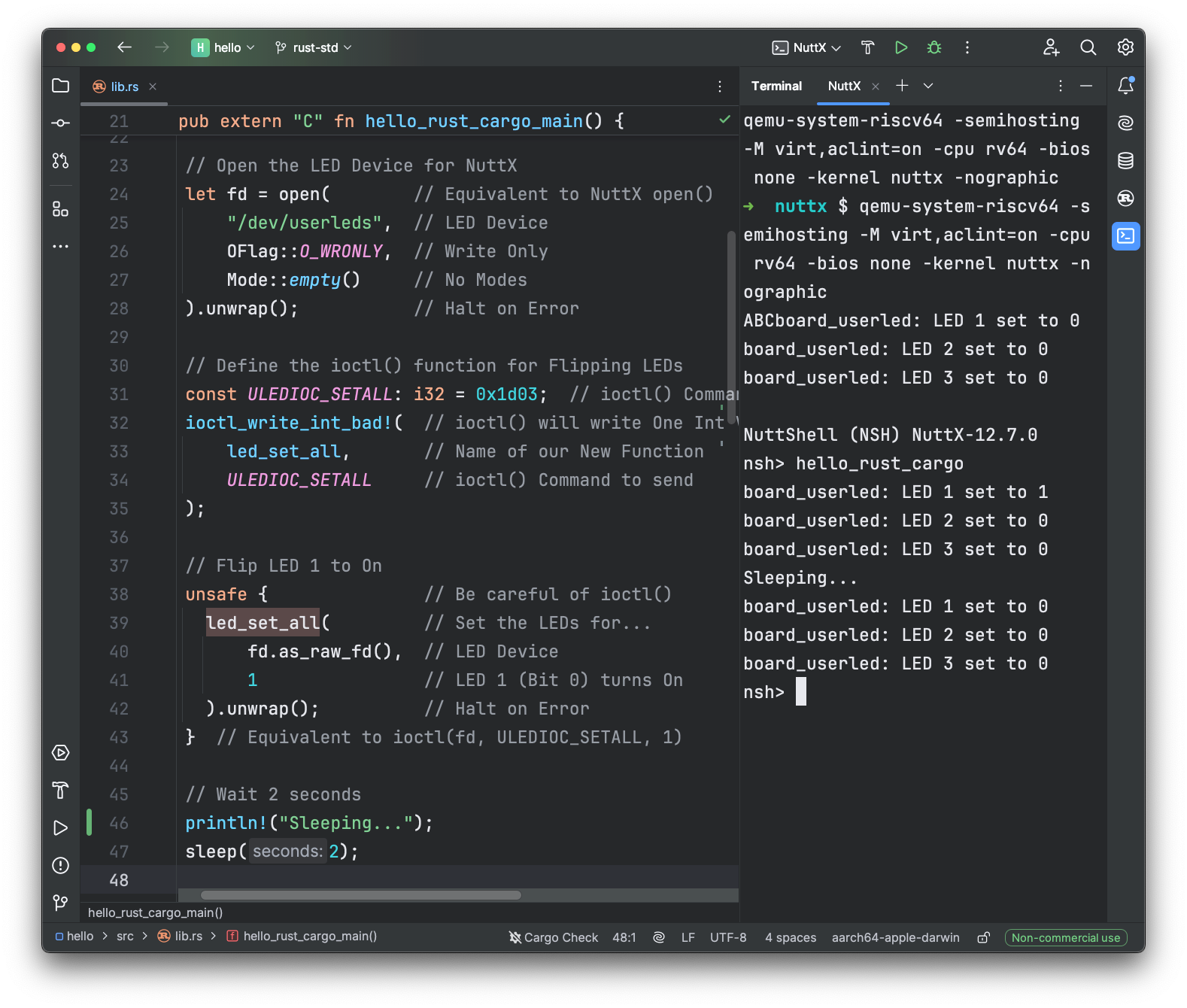

// Open the LED Device for NuttX

let fd = open( // Equivalent to NuttX open()

"/dev/userleds", // LED Device

OFlag::O_WRONLY, // Write Only

Mode::empty() // No Modes

).unwrap(); // Halt on Error

// Define the ioctl() function for Flipping LEDs

const ULEDIOC_SETALL: i32 = 0x1d03; // ioctl() Command

ioctl_write_int_bad!( // ioctl() will write One Int Value (LED Bit State)

led_set_all, // Name of our New Function

ULEDIOC_SETALL // ioctl() Command to send

);

// Flip LED 1 to On

unsafe { // Be careful of ioctl()

led_set_all( // Set the LEDs for...

fd.as_raw_fd(), // LED Device

1 // LED 1 (Bit 0) turns On

).unwrap(); // Halt on Error

} // Equivalent to ioctl(fd, ULEDIOC_SETALL, 1)

// Flip LED 1 to Off: ioctl(fd, ULEDIOC_SETALL, 0)

unsafe { led_set_all(fd.as_raw_fd(), 0).unwrap(); }

Which requires the nix Rust Crate / Library…

## Add the `nix` Rust Crate

## To our NuttX Rust App

$ cd apps/examples/rust/hello

$ cargo add nix --features fs,ioctl

Updating crates.io index

Adding nix v0.29.0 to dependencies

Features: + fs + ioctl

(OK it’s more complicated. Stay tuned)

All this is now possible, thanks to the awesome work by Huang Qi! 🎉

In today’s article, we explain…

How to build NuttX + Rust Standard Library

Handling JSON with the Serde Crate

Async Functions with the Tokio Crate

Blinking LEDs with the Nix Crate

How we ported Nix to NuttX

Why Nix? Rustix might be better

Why File Descriptors are “Owned” in Rust

How to build NuttX + Rust Standard Library?

Follow the instructions here…

Then run the (thoroughly revamped) Rust Hello App with QEMU RISC-V Emulator…

## Start NuttX on QEMU RISC-V 64-bit

$ qemu-system-riscv64 \

-semihosting \

-M virt,aclint=on \

-cpu rv64 \

-bios none \

-kernel nuttx \

-nographic

## Run the Rust Hello App

NuttShell (NSH) NuttX-12.8.0

nsh> hello_rust_cargo

{"name":"John","age":30}

{"name":"Jane","age":25}

Deserialized: Alice is 28 years old

Pretty JSON:

{

"name": "Alice",

"age": 28

}

Hello world from tokio!

Some bits are a little wonky (but will get better)

Supports Arm and RISC-V architectures (32-bit and 64-bit)

Works on Rust Nightly Toolchain (not Rust Stable)

Needs a tiny patch to Local Toolchain (pal/unix/fs.rs)

Sorry no RISC-V Floating Point and no Kernel Build

What’s inside the brand new Rust Hello App? We dive in…

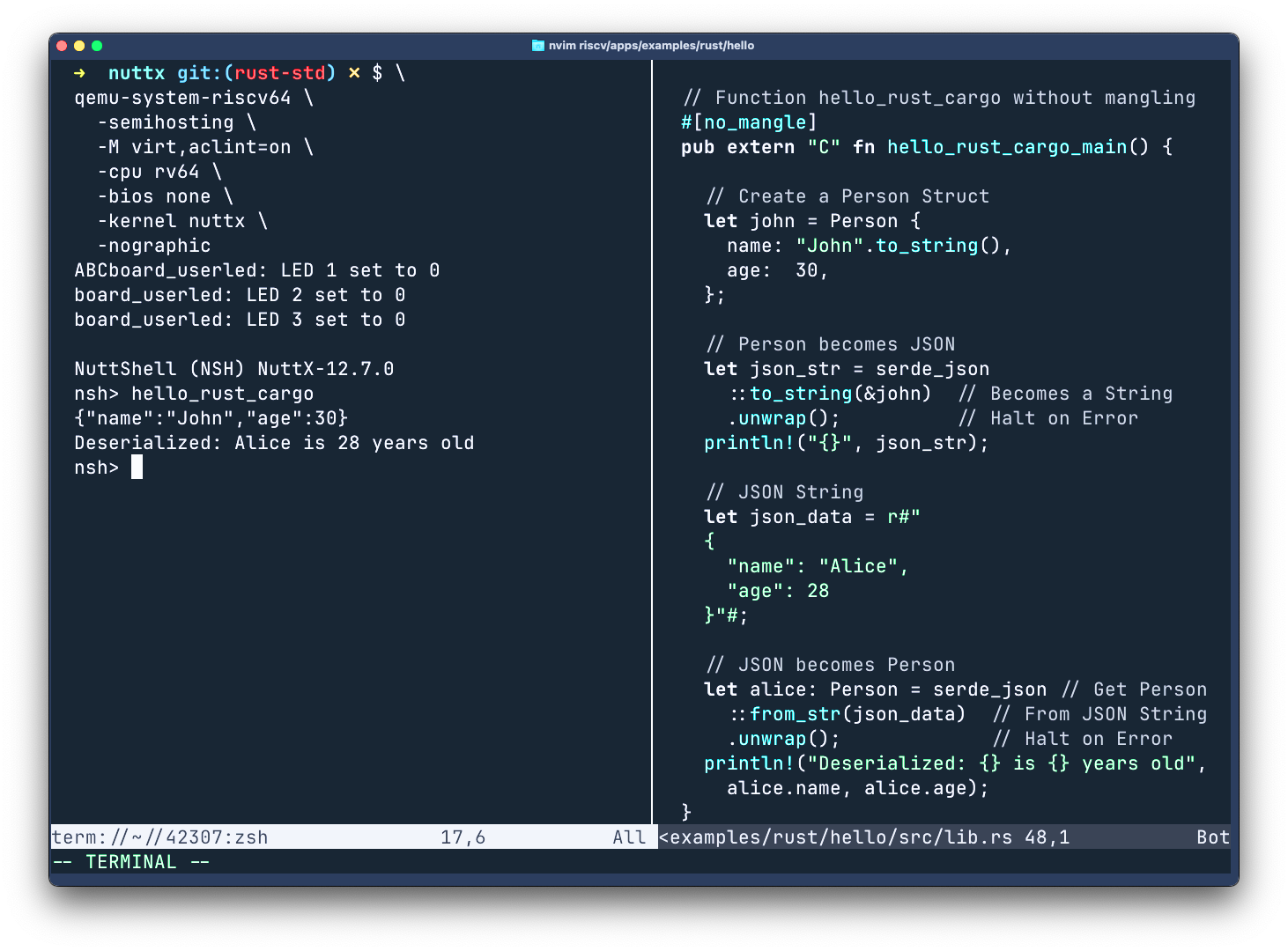

What’s this Serde?

Think “Serialize-Deserialize”. Serde is a Rust Crate / Library for Serializing and Deserializing our Data Structures. Works with JSON, CBOR, MessagePack, …

This is how we Serialize to JSON in our Hello Rust App: nuttx-apps/lib.rs

// Allow Serde to Serialize and Deserialize a Person Struct

#[derive(Serialize, Deserialize)]

struct Person {

name: String, // Containing a Name (string)

age: u8, // And Age (uint8_t)

} // Note: Rust Strings live in Heap Memory!

// Main Function of our Hello Rust App

#[no_mangle]

pub extern "C" fn hello_rust_cargo_main() {

// Create a Person Struct

let john = Person {

name: "John".to_string(),

age: 30,

};

// Serialize our Person Struct

let json_str = serde_json // Person Struct

::to_string(&john) // Becomes a String

.unwrap(); // Halt on Error

println!("{}", json_str);

Which will print…

NuttShell (NSH) NuttX-12.8.0

nsh> hello_rust_cargo

{"name":"John","age":30}

Now we Deserialize from JSON: lib.rs

// Declare a String with JSON inside

let json_data = r#"

{

"name": "Alice",

"age": 28

}"#;

// Deserialize our JSON String

// Into a Person Struct

let alice: Person = serde_json // Get Person Struct

::from_str(json_data) // From JSON String

.unwrap(); // Halt on Error

println!("Deserialized: {} is {} years old",

alice.name, alice.age);

And we’ll see…

Deserialized: Alice is 28 years old

Serde will also do JSON Formatting: lib.rs

// Serialize our Person Struct

// But neatly please

let pretty_json_str = serde_json // Person Struct

::to_string_pretty(&alice) // Becomes a Formatted String

.unwrap(); // Halt on Error

println!("Pretty JSON:\n{}", pretty_json_str);

Looks much neater…

Pretty JSON:

{

"name": "Alice",

"age": 28

}

(Serde runs on Rust Core Library, though super messy)

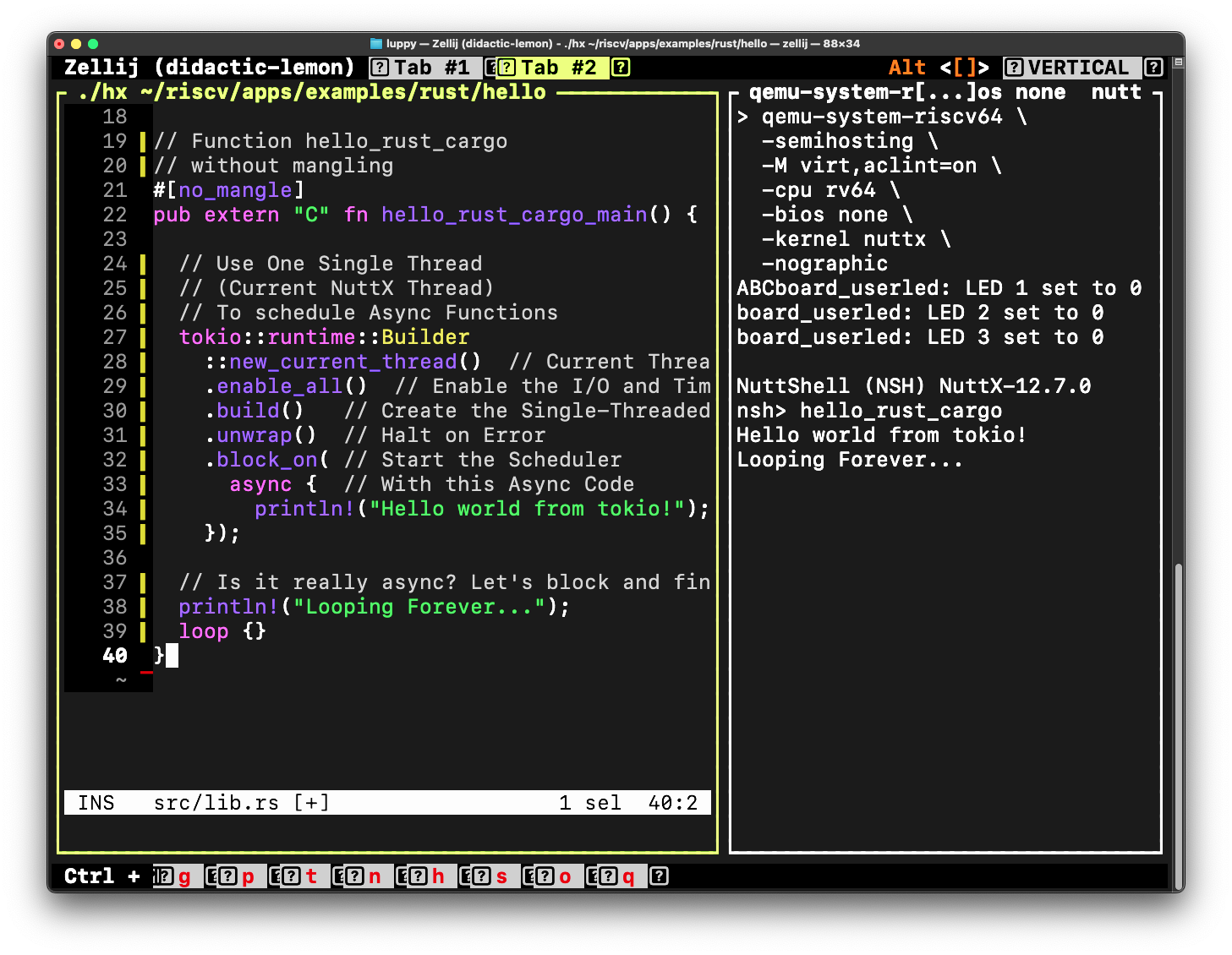

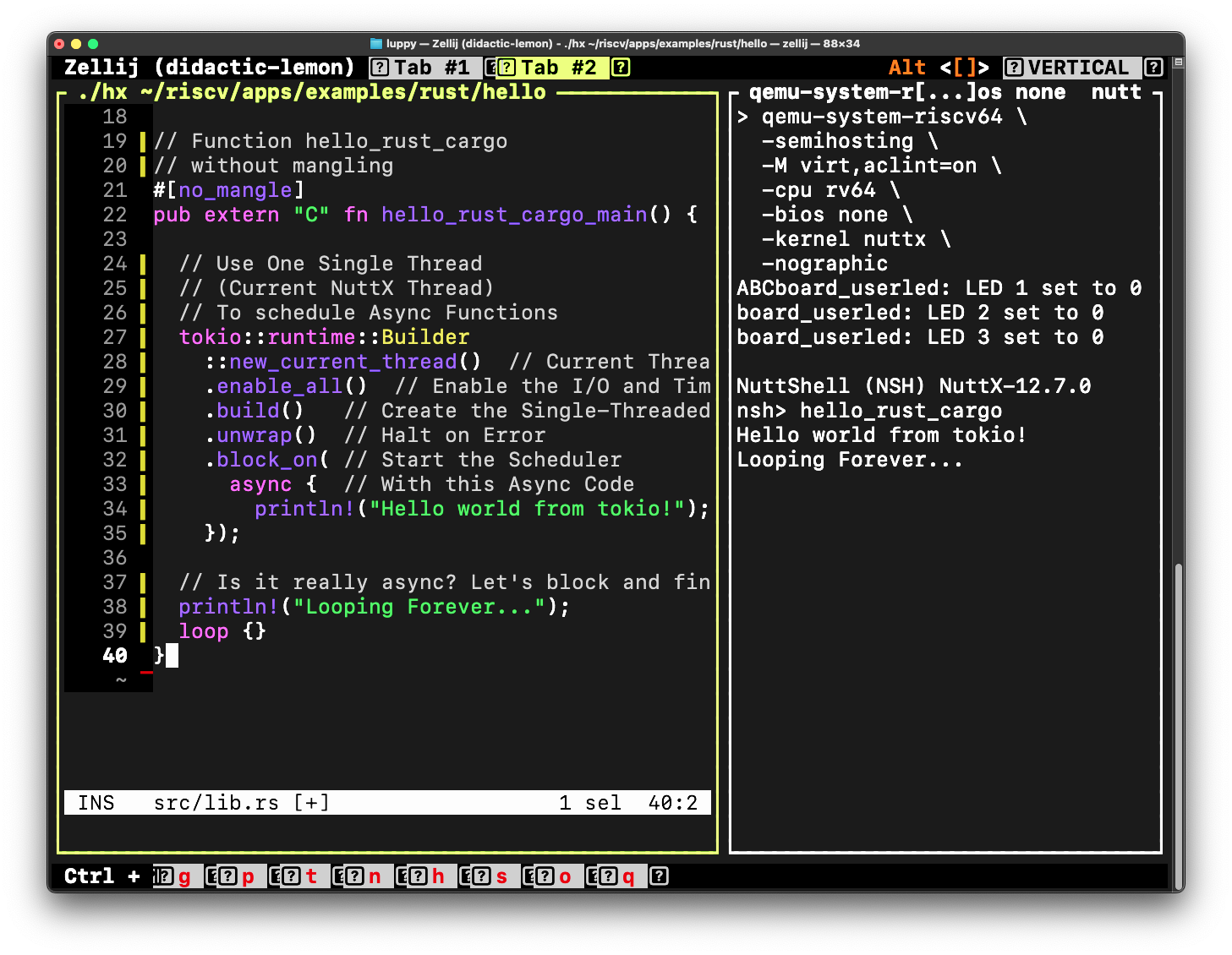

What’s this Tokio? Sounds like a city?

Indeed, “Tokio” is inspired by Tokyo (and Metal I/O)

Tokio … provides a runtime and functions that enable the use of Asynchronous I/O, allowing for Concurrency in regards to Task Completion

Inside our Rust Hello App, here’s how we run Async Functions with Tokio: nuttx-apps/lib.rs

// Use One Single Thread (Current Thread)

// To schedule Async Functions

tokio::runtime::Builder

::new_current_thread() // Current Thread is the Single-Threaded Scheduler

.enable_all() // Enable the I/O and Time Functions

.build() // Create the Single-Threaded Scheduler

.unwrap() // Halt on Error

.block_on( // Start the Scheduler

async { // With this Async Code

println!("Hello world from tokio!");

});

// Is it really async? Let's block and find out!

println!("Looping Forever...");

loop {}

We’ll see…

nsh> hello_rust_cargo

Hello world from tokio!

Looping Forever...

Yawn. Tokio looks underwhelming?

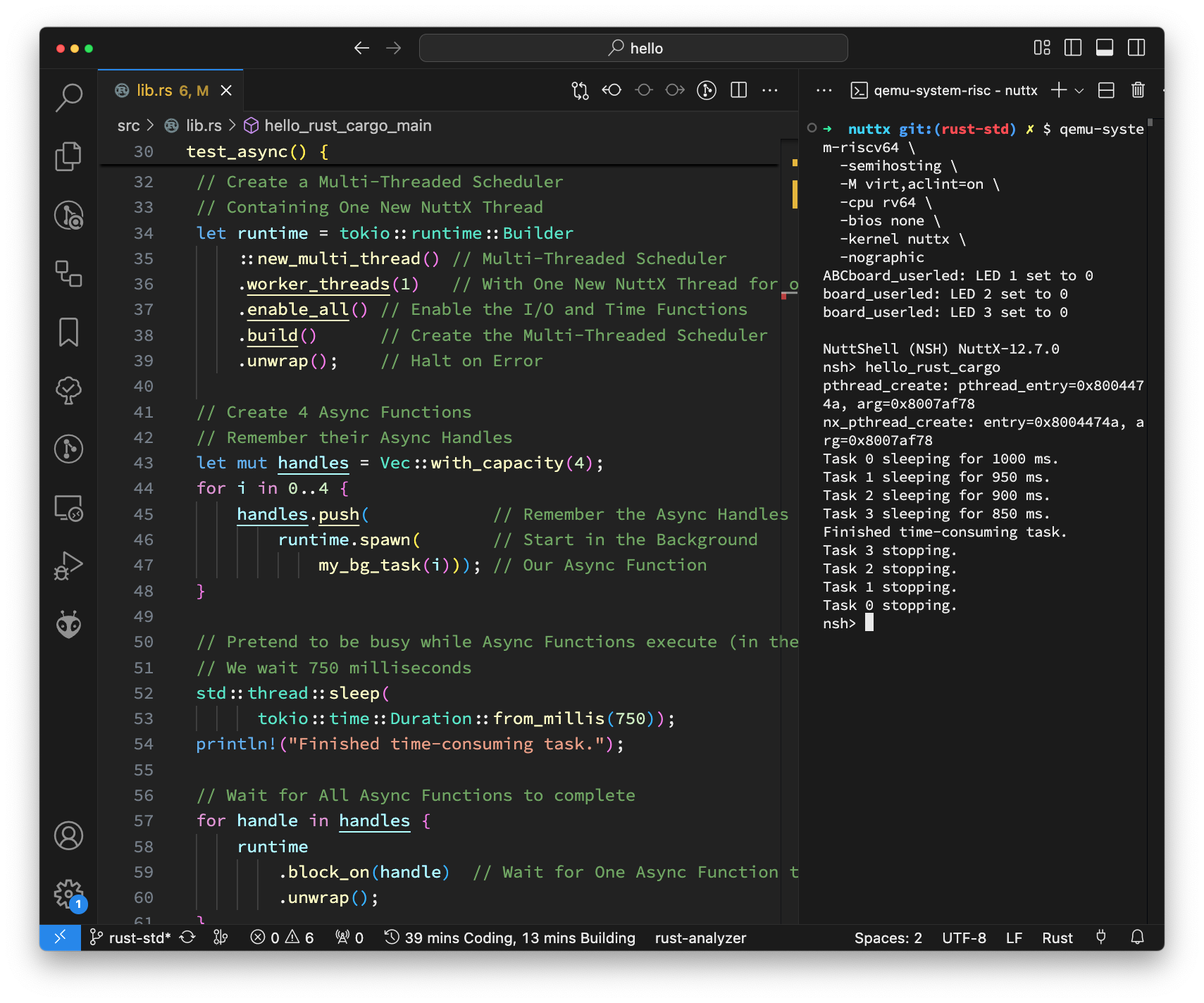

Ah we haven’t seen the full power of Tokio Multi-Threaded Async Functions on NuttX…

nsh> hello_rust_cargo

pthread_create

nx_pthread_create

Task 0 sleeping for 1000 ms

Task 1 sleeping for 950 ms

Task 2 sleeping for 900 ms

Task 3 sleeping for 850 ms

Finished time-consuming task

Task 3 stopping

Task 2 stopping

Task 1 stopping

Task 0 stopping

Check this link for the Tokio Async Demo. And it works beautifully on NuttX! (Pic below)

NuttX has POSIX Threads. Why use Async Functions?

Think Node.js and its Single-Thread Event Loop, making Non-Blocking I/O Calls. Supporting tens of thousands of concurrent connections. (Without costly Thread Context Switching)

Today we can (probably) do the same with NuttX and Async Rust. Assuming POSIX Async I/O works OK with Tokio.

(Tokio calls them “Async Tasks”, sorry we won’t. Because a Task in NuttX means something else)
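What does a Single-Threaded Scheduler do with an Async Function? Here’s a minimal sketch that uses only the Rust Standard Library. It is not Tokio’s implementation: `ThreadWaker`, `block_on` and `add` are hypothetical names we made up for illustration. The sketch polls one Async Function on the Current Thread, and parks the thread whenever the function isn’t ready yet…

```rust
use std::future::Future;
use std::pin::pin;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};
use std::thread::{self, Thread};

/// Hypothetical waker: Unparks the thread that is blocked inside `block_on`
pub struct ThreadWaker(Thread);

impl Wake for ThreadWaker {
    fn wake(self: Arc<Self>) {
        self.0.unpark();
    }
}

/// Hypothetical toy executor: Poll one Async Function to completion
/// on the Current Thread. No new threads are created.
pub fn block_on<F: Future>(fut: F) -> F::Output {
    let mut fut = pin!(fut); // Pin the future on the stack
    let waker = Waker::from(Arc::new(ThreadWaker(thread::current())));
    let mut cx = Context::from_waker(&waker);
    loop {
        match fut.as_mut().poll(&mut cx) {
            Poll::Ready(output) => return output, // Async Function has completed
            Poll::Pending => thread::park(),      // Sleep until the waker fires
        }
    }
}

/// An Async Function without any I/O: It completes on the first poll
pub async fn add(a: u32, b: u32) -> u32 {
    a + b
}
```

Calling `block_on(add(1, 2))` returns `3` on the Current Thread. A real scheduler like Tokio layers its I/O and Time Functions on top of this poll-and-wake idea.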

How will we use Tokio?

Tokio is designed for I/O-Bound Applications where each individual task spends most of its time waiting for I/O.

Which means it’s great for Network Servers. Instead of spawning many POSIX Threads, we spawn a few threads and call Async Functions.

(Check out Tokio Select and Tokio Streams)

We’re running nix on NuttX?

Oh that’s the nix Crate, which provides Safer Rust Bindings for POSIX / Unix / Linux. (It’s not NixOS)

This is how we add the library to our Rust Hello App…

$ cd ../apps/examples/rust/hello

$ cargo add nix \

--features fs,ioctl \

--git https://github.com/lupyuen/nix.git \

--branch nuttx

Updating git repository `https://github.com/lupyuen/nix.git`

Adding nix (git) to dependencies

Features: + fs + ioctl

34 deactivated features

URL looks sus?

Yep it’s our Bespoke nix Crate. That’s because the Official nix Crate doesn’t support NuttX yet. We made a few tweaks to compile on NuttX. (Explained in the Appendix)

Why call nix?

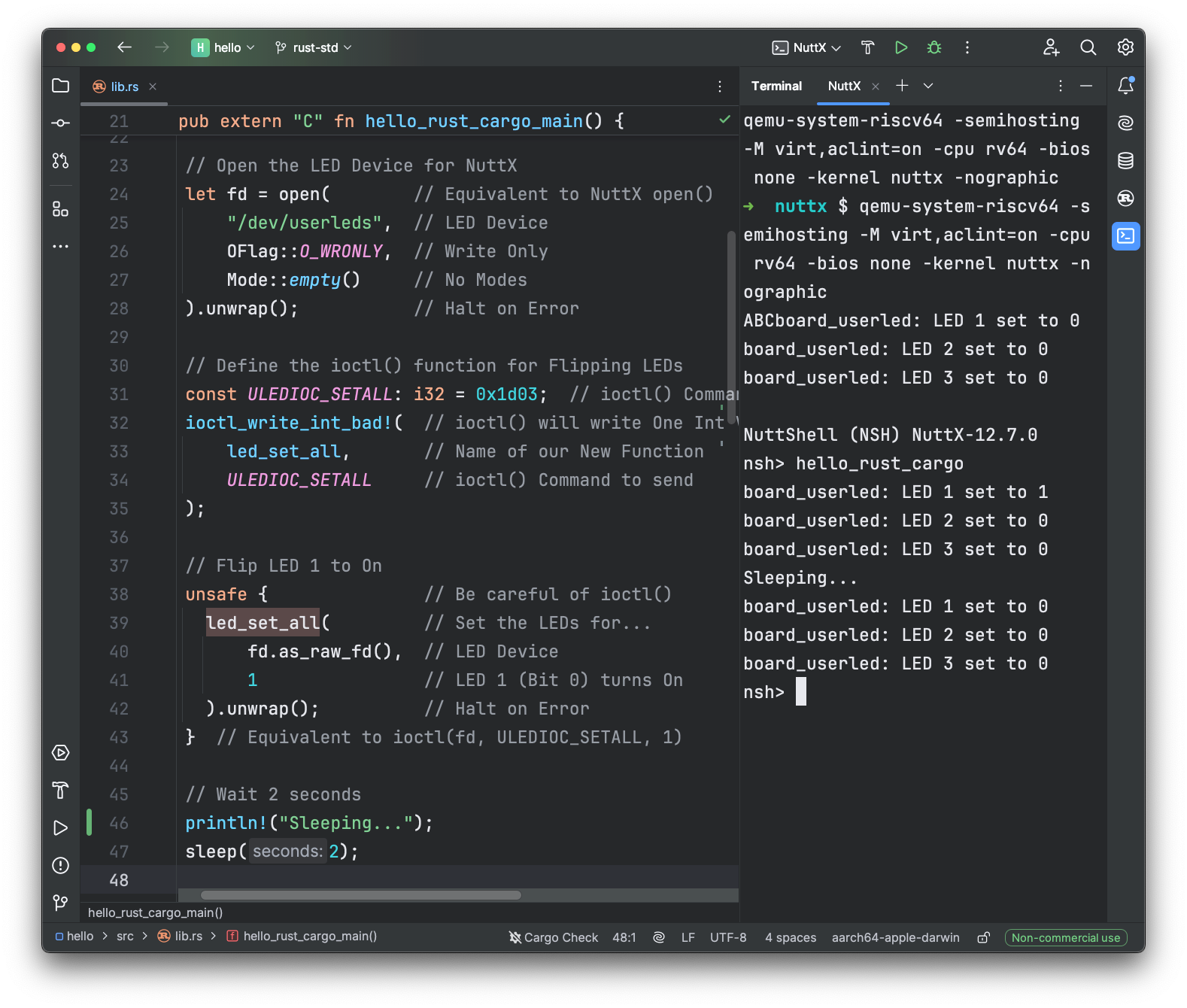

We’re Blinking the LED on NuttX. We could call the POSIX API directly from Rust…

let fd = unsafe { libc::open("/dev/userleds", ...) };

unsafe { libc::ioctl(fd, ULEDIOC_SETALL, 1); }

unsafe { libc::close(fd); }

Though it doesn’t look very… Safe. That’s why we call the Safer POSIX Bindings provided by nix. Like so: wip-nuttx-apps/lib.rs

// Open the LED Device for NuttX

let fd = open( // Equivalent to NuttX open()

"/dev/userleds", // LED Device

OFlag::O_WRONLY, // Write Only

Mode::empty() // No Modes

).unwrap(); // Halt on Error

// Define the ioctl() function for Flipping LEDs

const ULEDIOC_SETALL: i32 = 0x1d03; // ioctl() Command

ioctl_write_int_bad!( // ioctl() will write One Int Value (LED Bit State)

led_set_all, // Name of our New Function

ULEDIOC_SETALL // ioctl() Command to send

);

The code above opens the LED Device, returning an Owned File Descriptor (explained below). It defines a function led_set_all, that will call ioctl() to flip the LED.

Here’s how we call led_set_all to flip the LED: lib.rs

// Flip LED 1 to On

unsafe { // Be careful of ioctl()

led_set_all( // Set the LEDs for...

fd.as_raw_fd(), // LED Device

1 // LED 1 (Bit 0) turns On

).unwrap(); // Halt on Error

} // Equivalent to ioctl(fd, ULEDIOC_SETALL, 1)

We wait Two Seconds, then flip the LED to Off: lib.rs

// Wait 2 seconds

sleep(2);

// Flip LED 1 to Off: ioctl(fd, ULEDIOC_SETALL, 0)

unsafe { led_set_all(fd.as_raw_fd(), 0).unwrap(); }

ULEDIOC_SETALL looks familiar?

We spoke about ULEDIOC_SETALL in an earlier article. And the Rust Code above mirrors the C Version of our Blinky App.
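The value we pass to ULEDIOC_SETALL is a Bit Set: LED 1 is Bit 0, as noted in the code above. Here’s a small sketch of how we might compose that Bit Set for several LEDs. We assume that LED 2 maps to Bit 1, LED 3 to Bit 2, and so on. `led_mask` and `led_states` are hypothetical helpers, not part of NuttX or nix…

```rust
/// Hypothetical helper: Build the Bit Set for ULEDIOC_SETALL.
/// LEDs are numbered from 1, so LED n maps to Bit n-1.
pub fn led_mask(leds_on: &[u32]) -> u32 {
    leds_on
        .iter()
        .fold(0, |mask, &led| mask | (1 << (led - 1)))
}

/// Hypothetical helper: Decode a Bit Set into On / Off states
/// for the first `count` LEDs.
pub fn led_states(mask: u32, count: u32) -> Vec<bool> {
    (0..count)
        .map(|bit| (mask & (1 << bit)) != 0)
        .collect()
}
```

So `led_mask(&[1])` is `1` (the value we passed above to flip LED 1 to On) and `led_mask(&[])` is `0` (all LEDs Off).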

How to run the Rust Blinky App?

Copy the Rust Blinky Files from here…

lupyuen2/wip-nuttx-apps/examples/rust/hello

Specifically: Cargo.toml and src/lib.rs

Overwrite our Rust Hello App…

apps/examples/rust/hello

make -j

Then run it with QEMU RISC-V Emulator…

$ qemu-system-riscv64 \

-semihosting \

-M virt,aclint=on \

-cpu rv64 \

-bios none \

-kernel nuttx \

-nographic

NuttShell (NSH) NuttX-12.8.0

nsh> hello_rust_cargo

board_userled: LED 1 set to 1

board_userled: LED 1 set to 0

NuttX blinks the Emulated LED on QEMU Emulator!

How to code Rust Apps for NuttX?

We could open the apps folder in VSCode, but Rust Analyzer won’t work.

Do this instead: VSCode > File > Open Folder > apps/examples/rust/hello. Then Rust Analyzer will work perfectly.

cargo build seems to work, cargo run won’t. Remember to run cargo clippy…

$ cargo clippy

Checking hello v0.1.0 (apps/examples/rust/hello)

Finished `dev` profile [unoptimized + debuginfo] target(s) in 0.38s

Let’s talk about Owned File Descriptors vs Raw File Descriptors…

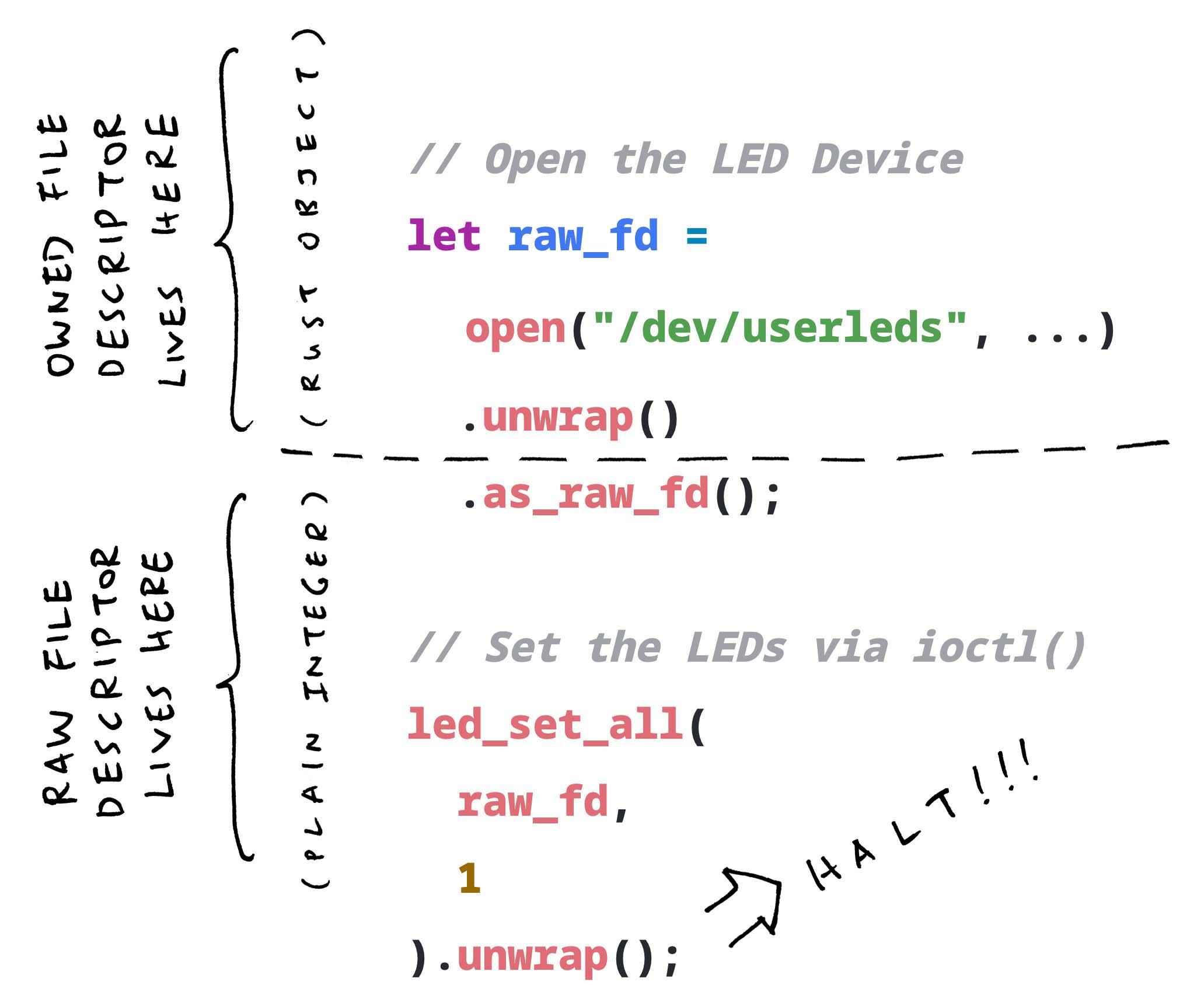

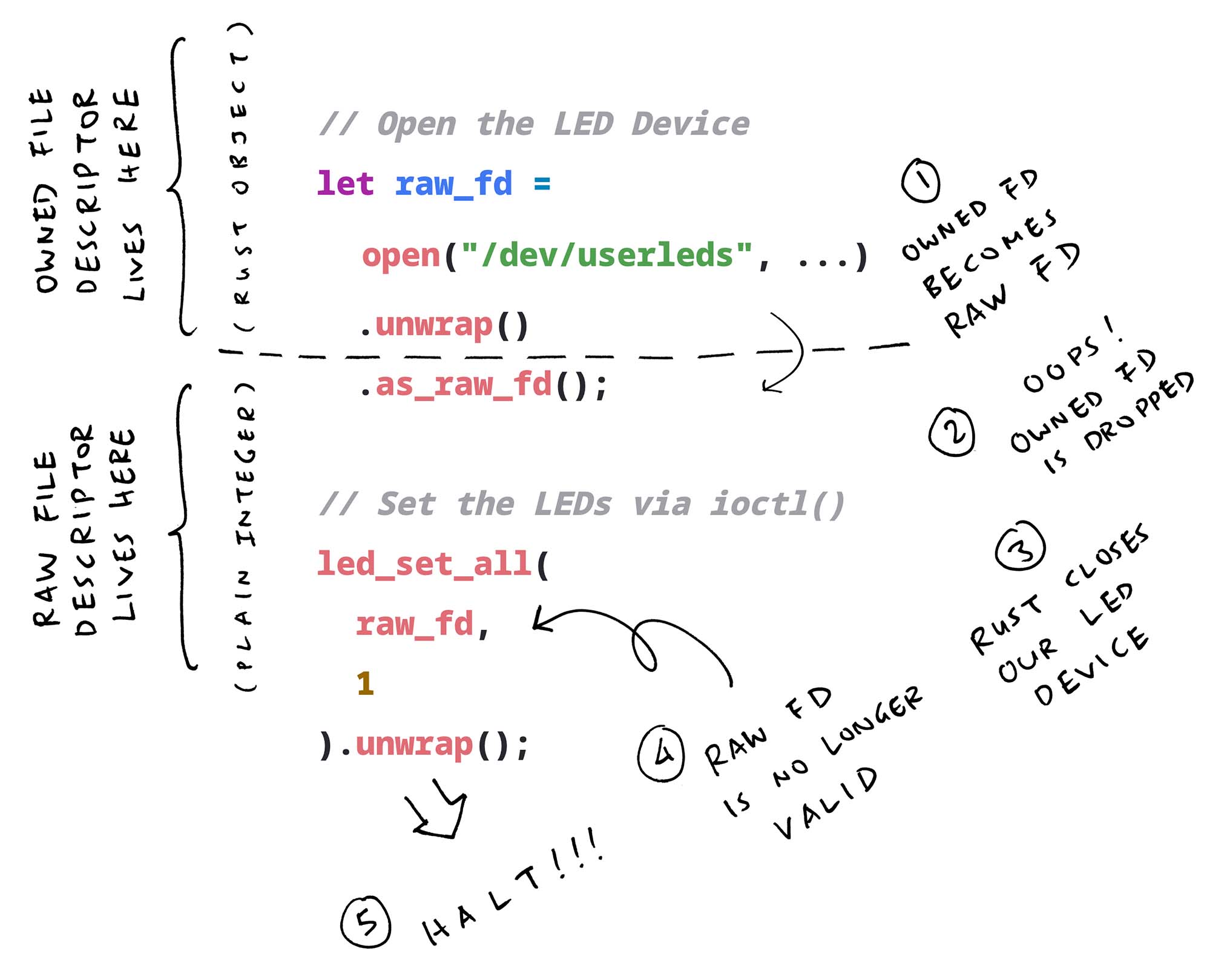

Safety Quiz: Why will this run OK…

// Copied from above: Open the LED Device

let owned_fd =

open("/dev/userleds", ...)

.unwrap(); // Returns an Owned File Descriptor

// Copied from above: Set the LEDs via ioctl()

led_set_all(

owned_fd.as_raw_fd(), // Extract the Raw File Descriptor

1 // Flip LED 1 to On

).unwrap(); // Yep runs OK

But Not This? (Pic above)

// Extract earlier the Raw File Descriptor (from the LED Device)

let raw_fd =

open("/dev/userleds", ...) // Open the LED Device

.unwrap() // Get the Owned File Descriptor

.as_raw_fd(); // Which becomes a Raw File Descriptor

// Set the LEDs via ioctl()

led_set_all(

raw_fd, // Use the earlier Raw File Descriptor

1 // Flip LED 1 to On

).unwrap(); // Oops will fail!

The Second Snippet will fail with EBADF Error…

nsh> hello_rust_cargo

thread '<unnamed>' panicked at src/lib.rs:32:33:

called `Result::unwrap()` on an `Err` value: EBADF

note: run with `RUST_BACKTRACE=1` environment variable to display a backtrace

There’s something odd about Raw File Descriptors vs Owned File Descriptors… Fetching the Raw One too early might cause EBADF Errors. Here’s why…

What’s a Raw File Descriptor?

In NuttX and POSIX: Raw File Descriptor is a Plain Integer that specifies an I/O Stream…

| File Descriptor | I/O Stream |

|---|---|

| 0 | Standard Input |

| 1 | Standard Output |

| 2 | Standard Error |

| 3 | /dev/userleds (assuming we opened it) |
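We can peek at the first three rows of the table from Rust. This sketch uses only the Rust Standard Library on a Unix-like host. `standard_fds` is a hypothetical helper, and we haven’t run it on NuttX…

```rust
use std::io::{stderr, stdin, stdout};
use std::os::fd::{AsRawFd, RawFd};

/// Hypothetical helper: Return the Raw File Descriptors (Plain Integers)
/// for Standard Input, Standard Output and Standard Error
pub fn standard_fds() -> (RawFd, RawFd, RawFd) {
    (
        stdin().as_raw_fd(),  // Standard Input
        stdout().as_raw_fd(), // Standard Output
        stderr().as_raw_fd(), // Standard Error
    )
}
```

On a Unix-like host, `standard_fds()` returns `(0, 1, 2)`, matching the table.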

What about Owned File Descriptor?

In Rust: Owned File Descriptor is a Rust Object, wrapped around a Raw File Descriptor.

And Rust Objects shall be Automatically Dropped, when they go out of scope. (Unlike Integers)

Causing the Second Snippet to fail?

Exactly! open() returns an Owned File Descriptor…

// Owned File Descriptor becomes Raw File Descriptor

let raw_fd =

open("/dev/userleds", ...) // Open the LED Device

.unwrap() // Get the Owned File Descriptor

.as_raw_fd(); // Which becomes a Raw File Descriptor

And we turned it into Raw File Descriptor. (The Plain Integer, not the Rust Object)

Oops! Our Owned File Descriptor goes Out Of Scope and gets dropped by Rust…

Thus Rust will helpfully close /dev/userleds. Since it’s closed, our Raw File Descriptor becomes invalid…

// Set the LEDs via ioctl()

led_set_all(

raw_fd, // Use the (closed) Raw File Descriptor

1 // Flip LED 1 to On

).unwrap(); // Oops will fail with EBADF Error!

Resulting in the EBADF Error. ioctl() failed because /dev/userleds is already closed!

Lesson Learnt: Be careful with Owned File Descriptors. They’re super helpful for Auto-Closing our files. But might have strange consequences.
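To watch the Drop Order without any hardware, here’s a sketch that replaces the real Owned File Descriptor with a stand-in that logs what happens. Everything here is hypothetical and for illustration only (`FakeOwnedFd`, `fake_open`, `fake_ioctl`, and the fixed descriptor number 3). Only the Drop Rules are real Rust behaviour…

```rust
use std::cell::RefCell;
use std::rc::Rc;

/// Shared Event Log, so we can see the order of open / ioctl / close
pub type Log = Rc<RefCell<Vec<&'static str>>>;

/// Hypothetical stand-in for an Owned File Descriptor
pub struct FakeOwnedFd {
    raw: i32,
    log: Log,
}

impl FakeOwnedFd {
    /// Extract the Raw File Descriptor (a Plain Integer)
    pub fn as_raw_fd(&self) -> i32 {
        self.raw
    }
}

impl Drop for FakeOwnedFd {
    /// Dropping the Owned File Descriptor "closes" the file
    fn drop(&mut self) {
        self.log.borrow_mut().push("close");
    }
}

pub fn fake_open(log: &Log) -> FakeOwnedFd {
    log.borrow_mut().push("open");
    FakeOwnedFd { raw: 3, log: Rc::clone(log) }
}

pub fn fake_ioctl(log: &Log, _raw_fd: i32) {
    log.borrow_mut().push("ioctl");
}

/// First Snippet: Keep the Owned File Descriptor alive while we use the Raw One
pub fn first_snippet() -> Vec<&'static str> {
    let log = Log::default();
    {
        let owned_fd = fake_open(&log);
        fake_ioctl(&log, owned_fd.as_raw_fd());
    } // `owned_fd` goes Out Of Scope here, AFTER the ioctl
    let events = log.borrow().clone();
    events
}

/// Second Snippet: The Owned File Descriptor is a temporary value,
/// dropped at the end of the `let` statement, BEFORE the ioctl
pub fn second_snippet() -> Vec<&'static str> {
    let log = Log::default();
    let raw_fd = fake_open(&log).as_raw_fd();
    fake_ioctl(&log, raw_fd);
    let events = log.borrow().clone();
    events
}
```

`first_snippet()` logs open, ioctl, close. `second_snippet()` logs open, close, ioctl: the file is already closed when ioctl runs. That’s the same ordering behind our EBADF Error.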

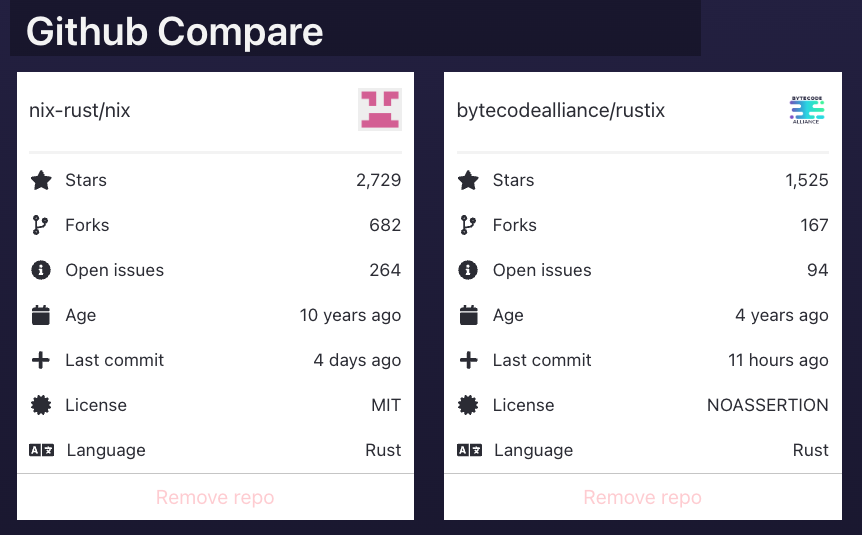

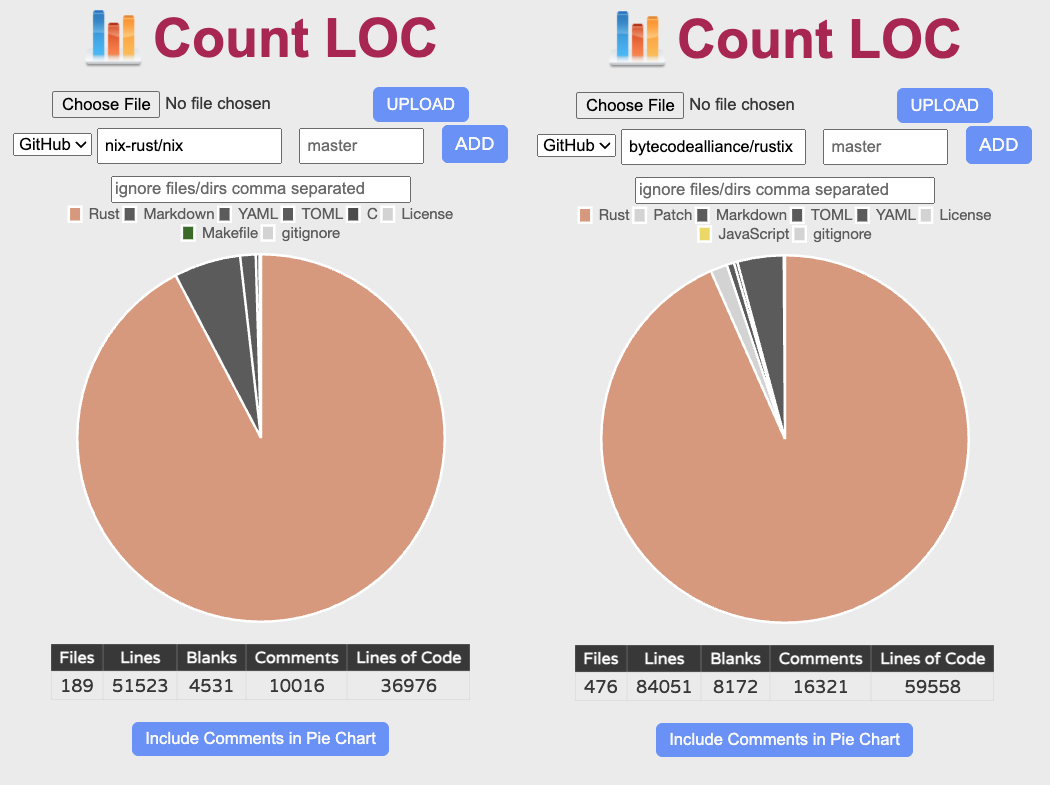

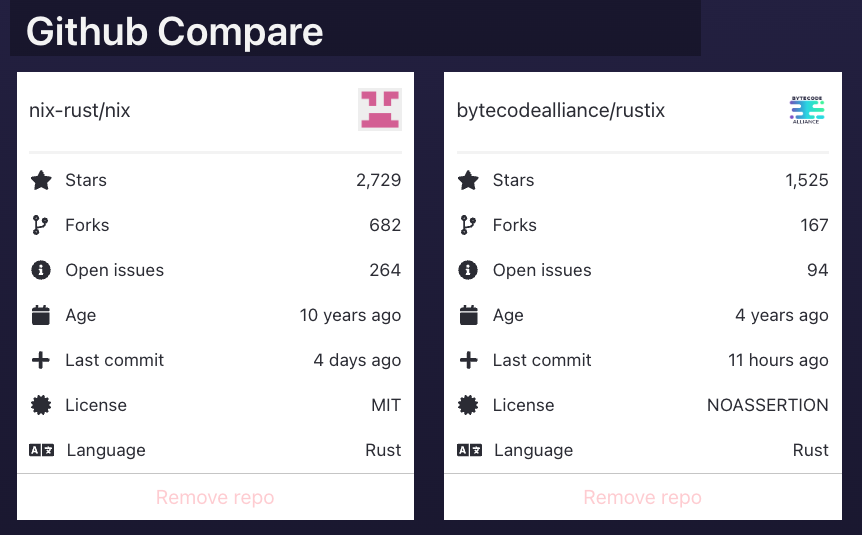

Rustix is another popular POSIX Wrapper. We take a peek…

Is there a Safer Way to call ioctl()?

Calling ioctl() from Rust will surely get messy: It’s an Unsafe Call that might cause bad writes into the NuttX Kernel! (If we’re not careful)

At the top of the article, we saw nix crate calling ioctl(). Now we look at Rustix calling ioctl(): rustix/fs/ioctl.rs

// Let's implement ioctl(fd, BLKSSZGET, &output)

// In Rustix: ioctl() is also unsafe

unsafe {

// Create an "Ioctl Getter"

// That will read data thru ioctl()

let ctl = ioctl::Getter::< // Ioctl Getter has 2 attributes...

ioctl::BadOpcode< // Attribute #1: Ioctl Command Code

{ c::BLKSSZGET } // Which is "Fetch the Logical Block Size of a Block Device"

>,

c::c_uint // Attribute #2: Ioctl Getter will read a uint32_t thru ioctl()

>::new(); // Create the Ioctl Getter

// Now that we have the Ioctl Getter

// We call ioctl() on the File Descriptor

// Equivalent to: ioctl(fd, BLKSSZGET, &output) ; return output

ioctl::ioctl(

fd, // Borrowed File Descriptor (safer than Raw)

ctl // Ioctl Getter

) // Returns the Value Read (Or Error)

}

(Rustix Ioctl passes a Borrowed File Descriptor, safer than Raw)

Nix vs Rustix: They feel quite similar?

Actually Nix was previously a lot simpler, supporting only Raw File Descriptors. (Instead of Owned File Descriptors)

Today, Nix is moving to Owned File Descriptors due to I/O Safety. Bummer it means Nix is becoming more Rustix-like…

Rust: I/O Safety (used in Rustix and New Nix)
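Why is a Borrowed File Descriptor safer than a Raw One? Because it carries a Lifetime, so the Rust Compiler rejects code that uses the descriptor after its owner is dropped. Here’s a sketch with the Rust Standard Library types. `describe` and `borrow_while_owner_lives` are hypothetical names, and we open /dev/null on a Unix-like host instead of the LED Device…

```rust
use std::fs::File;
use std::io;
use std::os::fd::{AsFd, AsRawFd, BorrowedFd, RawFd};

/// Hypothetical function that accepts a Borrowed File Descriptor,
/// instead of a Raw File Descriptor
pub fn describe(fd: BorrowedFd<'_>) -> RawFd {
    fd.as_raw_fd()
}

/// Borrow the descriptor while its owner (`file`) is still alive
pub fn borrow_while_owner_lives() -> io::Result<bool> {
    let file = File::open("/dev/null")?; // Owner of the File Descriptor
    let borrowed = file.as_fd();         // Borrow is tied to the lifetime of `file`
    let raw = describe(borrowed);

    // This would NOT compile, because the temporary File is dropped
    // at the end of the statement while still borrowed:
    //   let dangling = File::open("/dev/null")?.as_fd();
    //   describe(dangling);

    Ok(raw == file.as_raw_fd())
}
```

The commented-out lines are the Borrowed version of our Second Snippet from the Safety Quiz. With Raw File Descriptors it compiles and fails at runtime with EBADF. With Borrowed File Descriptors it fails at compile time.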

What’s our preference: Nix or Rustix?

Hmmm we’re still pondering. Rustix is newer (pic above), but it’s also more complex (based on Lines of Code). It might hinder our porting to NuttX.

Which would you choose? Lemme know! 🙏

(Rustix on NuttX: Will it run? Nope not yet)

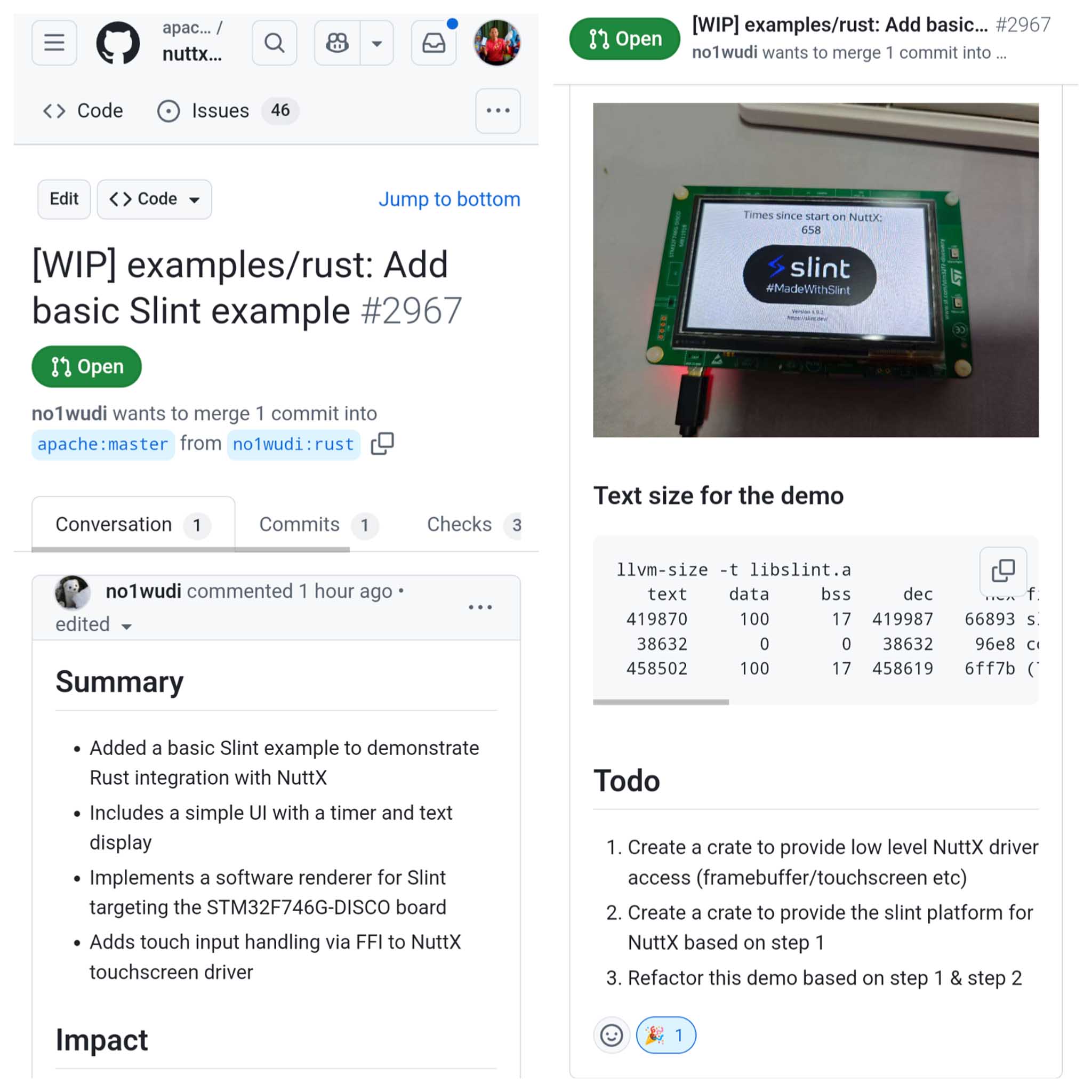

(no1wudi/nuttx-rs shows potential)

(Rust Embedded HAL might be a bad fit)

Upcoming: Slint Rust GUI for NuttX 🎉

What platforms are supported for NuttX + Rust Standard Library? How about SBCs?

Arm and RISC-V (32-bit and 64-bit). Check this doc for updates.

Sorry 64-bit RISC-V Kernel Build is not supported yet. So it won’t run on RISC-V SBCs like Ox64 BL808 and Oz64 SG2000.

Sounds like we need plenty of Rust Testing? For every NuttX Platform?

Yeah maybe we need Daily Automated Testing of NuttX + Rust Standard Library on NuttX Build Farm?

With QEMU Emulator or a Real Device?

And when the Daily Test fails: How to Auto-Rewind the Build and discover the Breaking Commit? Hmmm…

Many Thanks to the awesome NuttX Admins and NuttX Devs! And My Sponsors, for sticking with me all these years.

Got a question, comment or suggestion? Create an Issue or submit a Pull Request here…

Follow these steps to build NuttX bundled with Rust Standard Library…

(Remember to install RISC-V Toolchain and RISC-V QEMU)

## Install Rust: https://rustup.rs/

## Select "Standard Installation"

curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh

. "$HOME/.cargo/env"

## Switch to the Nightly Toolchain

rustup update

rustup toolchain install nightly

rustup default nightly

## Should show `rustc 1.86.0-nightly` or later

rustc --version

## Install the Nightly Toolchain

rustup component add rust-src --toolchain nightly-x86_64-unknown-linux-gnu

## For macOS: rustup component add rust-src --toolchain nightly-aarch64-apple-darwin

## Download the NuttX Kernel and Apps

git clone https://github.com/apache/nuttx

git clone https://github.com/apache/nuttx-apps apps

cd nuttx

## Configure NuttX for RISC-V 64-bit QEMU with LEDs

## (Alternatively: rv-virt:nsh64 or rv-virt:nsh or rv-virt:leds)

tools/configure.sh rv-virt:leds64

## Disable Floating Point: CONFIG_ARCH_FPU

kconfig-tweak --disable CONFIG_ARCH_FPU

## Enable CONFIG_SYSTEM_TIME64 / CONFIG_FS_LARGEFILE / CONFIG_DEV_URANDOM / CONFIG_TLS_NELEM = 16

kconfig-tweak --enable CONFIG_SYSTEM_TIME64

kconfig-tweak --enable CONFIG_FS_LARGEFILE

kconfig-tweak --enable CONFIG_DEV_URANDOM

kconfig-tweak --set-val CONFIG_TLS_NELEM 16

## Enable the Hello Rust Cargo App

## Increase the App Stack Size from 2 KB to 16 KB (especially for 64-bit platforms)

kconfig-tweak --enable CONFIG_EXAMPLES_HELLO_RUST_CARGO

kconfig-tweak --set-val CONFIG_EXAMPLES_HELLO_RUST_CARGO_STACKSIZE 16384

## Update the Kconfig Dependencies

make olddefconfig

## Build NuttX

make -j

## If it fails with "Mismatched Types":

## Patch the file `fs.rs` (see below)

## Start NuttX on QEMU RISC-V 64-bit

qemu-system-riscv64 \

-semihosting \

-M virt,aclint=on \

-cpu rv64 \

-bios none \

-kernel nuttx \

-nographic

## Inside QEMU: Run our Hello Rust App

hello_rust_cargo

We’ll see this in QEMU RISC-V Emulator…

NuttShell (NSH) NuttX-12.8.0

nsh> hello_rust_cargo

{"name":"John","age":30}

{"name":"Jane","age":25}

Deserialized: Alice is 28 years old

Pretty JSON:

{

"name": "Alice",

"age": 28

}

Hello world from tokio!

To Quit QEMU: Press Ctrl-a then x

(Also works for 32-bit rv-virt:leds)

Troubleshooting The Rust Build

If NuttX Build fails with “Mismatched Types”…

Compiling std v0.0.0 (.rustup/toolchains/nightly-x86_64-unknown-linux-gnu/lib/rustlib/src/rust/library/std)

error[E0308]: mismatched types

--> .rustup/toolchains/nightly-x86_64-unknown-linux-gnu/lib/rustlib/src/rust/library/std/src/sys/pal/unix/fs.rs:1037:33

1037 | unsafe { CStr::from_ptr(self.entry.d_name.as_ptr()) }

| -------------- ^^^^^^^^^^^^^^^^^^^^^^^^^^ expected `*const u8`, found `*const i8`

| |

| arguments to this function are incorrect

= note: expected raw pointer `*const u8`

found raw pointer `*const i8`

note: associated function defined here

--> .rustup/toolchains/nightly-x86_64-unknown-linux-gnu/lib/rustlib/src/rust/library/core/src/ffi/c_str.rs:264:25

264 | pub const unsafe fn from_ptr<'a>(ptr: *const c_char) -> &'a CStr {

| ^^^^^^^^

Then edit this file…

## For Ubuntu

$HOME/.rustup/toolchains/nightly-x86_64-unknown-linux-gnu/lib/rustlib/src/rust/library/std/src/sys/pal/unix/fs.rs

## For macOS

$HOME/.rustup/toolchains/nightly-aarch64-apple-darwin/lib/rustlib/src/rust/library/std/src/sys/pal/unix/fs.rs

Change the name_cstr function at Line 1036…

fn name_cstr(&self) -> &CStr {

unsafe { CStr::from_ptr(self.entry.d_name.as_ptr()) }

}

To this…

fn name_cstr(&self) -> &CStr {

unsafe { CStr::from_ptr(self.entry.d_name.as_ptr() as *const u8) }

}

And verify the change…

## For Ubuntu

head -n 1049 $HOME/.rustup/toolchains/nightly-x86_64-unknown-linux-gnu/lib/rustlib/src/rust/library/std/src/sys/pal/unix/fs.rs \

| tail -n 17

## For macOS

head -n 1049 $HOME/.rustup/toolchains/nightly-aarch64-apple-darwin/lib/rustlib/src/rust/library/std/src/sys/pal/unix/fs.rs \

| tail -n 17

## We should see

## fn name_cstr(&self) -> &CStr {

## unsafe { CStr::from_ptr(self.entry.d_name.as_ptr() as *const u8) }

Finally rebuild with make -j
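Why does the cast fix it? Going by the error message above: `CStr::from_ptr` expects a `*const c_char`, which is `*const u8` for the NuttX target, while `d_name` hands over a `*const i8`. Here’s a standalone sketch of the same idea. We cast to `c_char` instead of hard-coding `u8`, so it compiles whether the host’s `c_char` is signed or unsigned. This is our own illustration (with a hypothetical slice-based `name_cstr`), not the patch above and not an upstream fix…

```rust
use std::ffi::{c_char, CStr};

/// Hypothetical standalone version of `name_cstr`:
/// Read a NUL-terminated name from a buffer of Signed Bytes
pub fn name_cstr(d_name: &[i8]) -> &CStr {
    // Refuse buffers without a terminator, so we never read past the end
    assert!(d_name.contains(&0), "d_name must be NUL-terminated");

    // SAFETY: The slice contains a NUL, so CStr::from_ptr stops inside the slice.
    // The returned reference borrows from `d_name`.
    unsafe { CStr::from_ptr(d_name.as_ptr().cast::<c_char>()) }
}
```

For example, `name_cstr(&[104, 105, 0])` yields the C String "hi".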

If the build fails with “-Z” Error…

error: the `-Z` flag is only accepted on the nightly channel of Cargo

but this is the `stable` channel

Then switch to the Nightly Toolchain…

## Switch to the Nightly Toolchain

rustup update

rustup toolchain install nightly

rustup default nightly

## Should show `rustc 1.86.0-nightly` or later

rustc --version

If the build fails with “Unable to build with the Standard Library”…

error: ".rustup/toolchains/nightly-x86_64-unknown-linux-gnu/lib/rustlib/src/rust/library/Cargo.lock" does not exist, unable to build with the standard library

try: rustup component add rust-src --toolchain nightly-x86_64-unknown-linux-gnu

Then install the Nightly Toolchain…

## Install the Nightly Toolchain

rustup component add rust-src --toolchain nightly-x86_64-unknown-linux-gnu

## For macOS: rustup component add rust-src --toolchain nightly-aarch64-apple-darwin

If the build fails with “Error Loading Target”…

error: Error loading target specification:

Could not find specification for target "riscv64imafdc-unknown-nuttx-elf"

Then disable Floating Point…

## Disable Floating Point: CONFIG_ARCH_FPU

kconfig-tweak --disable CONFIG_ARCH_FPU

## Update the Kconfig Dependencies

make olddefconfig

make -j

What if we’re using Rust already? And we don’t wish to change the Default Toolchain?

Use rustup override to Override the Folder Toolchain. Do it in the Parent Folder of nuttx and apps…

## Set Rust to Nightly Build

## Apply this to the Parent Folder

## So it will work for `nuttx` and `apps`

pushd ..

rustup override list

rustup override set nightly

rustup override list

popd

Rust App crashes in QEMU?

We might see a Stack Dump that Loops Forever. Or we might see 100% Full for the App Stack…

PID GROUP PRI POLICY TYPE NPX STATE EVENT SIGMASK STACKBASE STACKSIZE USED FILLED COMMAND

3 3 100 RR Task - Running 0000000000000000 0x80071420 1856 1856 100.0%! hello_rust_cargo

Then increase the App Stack Size…

## Increase the App Stack Size to 64 KB

kconfig-tweak --set-val \

CONFIG_EXAMPLES_HELLO_RUST_CARGO_STACKSIZE \

65536

## Update the Kconfig Dependencies and rebuild

make olddefconfig

make -j

Rust Build seems to break sometimes?

We might need to clean up the Rust Target Files, if the Rust Build goes wonky…

## Erase the Rust Build and rebuild

pushd ../apps/examples/rust/hello

cargo clean

popd

make -j

How did we port Rust Standard Library to NuttX? Details here…

Earlier we saw Tokio’s Single-Threaded Scheduler, running on the Current Thread…

// Use One Single Thread (Current Thread)

// To schedule Async Functions

tokio::runtime::Builder

::new_current_thread() // Current Thread is the Single-Threaded Scheduler

.enable_all() // Enable the I/O and Time Functions

.build() // Create the Single-Threaded Scheduler

.unwrap() // Halt on Error

.block_on( // Start the Scheduler

async { // With this Async Code

println!("Hello world from tokio!");

});

// Is it really async? Let's block and find out!

println!("Looping Forever...");

loop {}

And it ain’t terribly exciting…

nsh> hello_rust_cargo

Hello world from tokio!

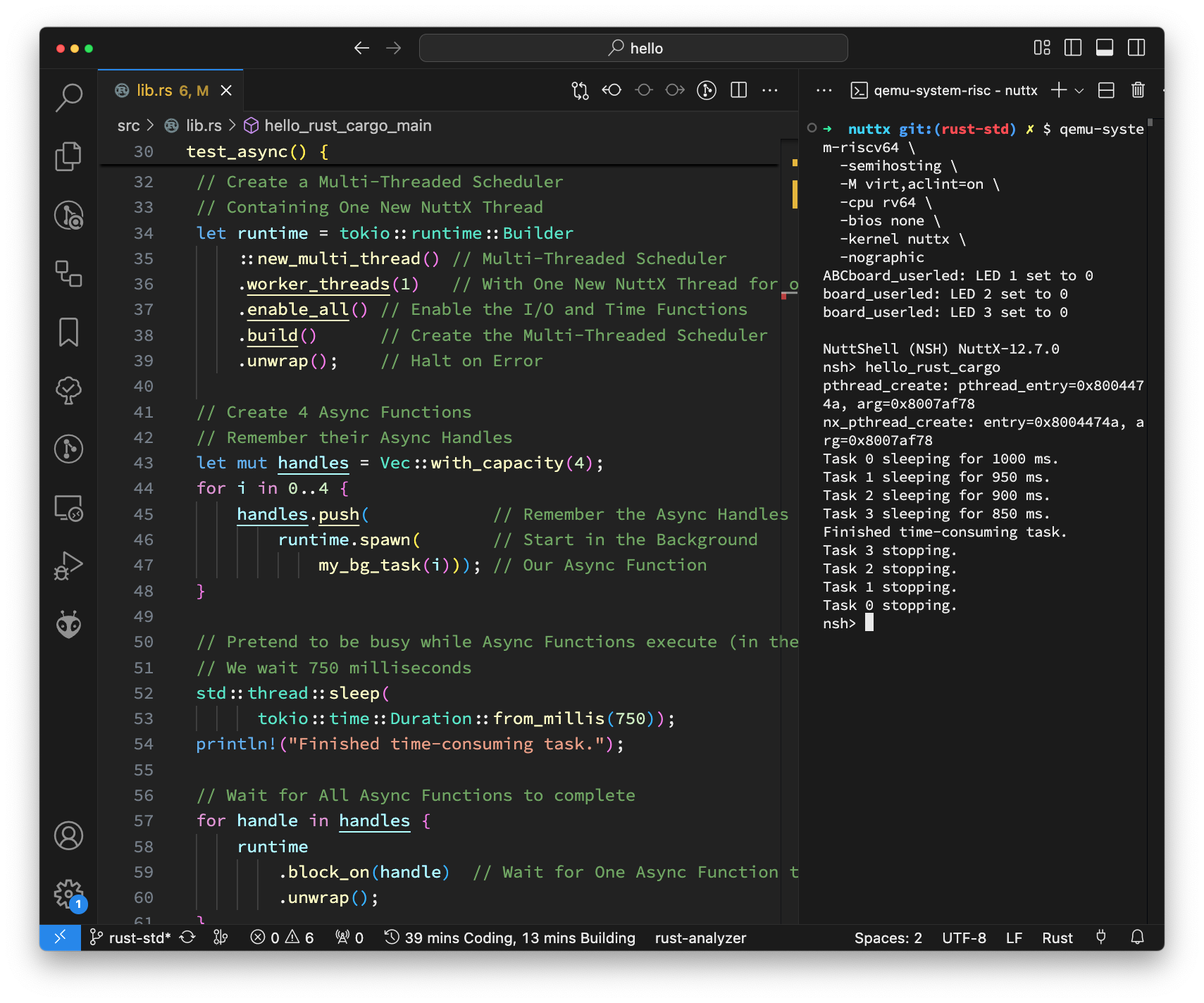

Looping Forever...Now we try Tokio’s Multi-Threaded Scheduler. And we create One New POSIX Thread for the Scheduler: wip-nuttx-apps/lib.rs

// Run 4 Async Functions in the Background

// By creating One New POSIX Thread

// Based on https://tokio.rs/tokio/topics/bridging

fn test_async() {

// Create a Multi-Threaded Scheduler

// Containing One New POSIX Thread

let runtime = tokio::runtime::Builder

::new_multi_thread() // Multi-Threaded Scheduler

.worker_threads(1) // With One New POSIX Thread for our Scheduler

.enable_all() // Enable the I/O and Time Functions

.build() // Create the Multi-Threaded Scheduler

.unwrap(); // Halt on Error

// Create 4 Async Functions

// Remember their Async Handles

let mut handles = Vec::with_capacity(4);

for i in 0..4 {

handles.push( // Remember the Async Handles

runtime.spawn( // Start in the Background

my_bg_task(i))); // Our Async Function

}

// Pretend to be busy while Async Functions execute (in the background)

// We wait 750 milliseconds

std::thread::sleep(

tokio::time::Duration::from_millis(750));

println!("Finished time-consuming task.");

// Wait for All Async Functions to complete

for handle in handles {

runtime

.block_on(handle) // Wait for One Async Function to complete

.unwrap();

}

}

// Our Async Function that runs in the background...

// If i=0: Sleep for 1000 ms

// If i=1: Sleep for 950 ms

// If i=2: Sleep for 900 ms

// If i=3: Sleep for 850 ms

async fn my_bg_task(i: u64) {

let millis = 1000 - 50 * i;

println!("Task {} sleeping for {} ms.", i, millis);

tokio::time::sleep(

tokio::time::Duration::from_millis(millis)

).await; // Wait for sleep to complete

println!("Task {} stopping.", i);

}

// Needed by Tokio Multi-Threaded Scheduler

#[no_mangle]

pub extern "C" fn pthread_set_name_np() {}

How to run the Tokio Demo?

Copy the Tokio Demo Files from here…

lupyuen2/wip-nuttx-apps/examples/rust/hello

Specifically: Cargo.toml and src/lib.rs

Overwrite our Rust Hello App…

apps/examples/rust/hello

make -j

Then run it with QEMU RISC-V Emulator…

$ qemu-system-riscv64 \

-semihosting \

-M virt,aclint=on \

-cpu rv64 \

-bios none \

-kernel nuttx \

-nographic

NuttShell (NSH) NuttX-12.8.0

nsh> hello_rust_cargo

We’ll see Four Async Functions, running on One New POSIX Thread…

nsh> hello_rust_cargo

pthread_create

nx_pthread_create

Task 0 sleeping for 1000 ms

Task 1 sleeping for 950 ms

Task 2 sleeping for 900 ms

Task 3 sleeping for 850 ms

Finished time-consuming task

Task 3 stopping

Task 2 stopping

Task 1 stopping

Task 0 stopping

See the call to pthread_create, which calls nx_pthread_create? It means that Tokio is actually calling NuttX to create One POSIX Thread! (For the Multi-Threaded Scheduler)

Yep it’s consistent with our Reverse Engineering of Tokio…
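Why do the Async Functions stop in reverse order (3, 2, 1, 0)? Each one sleeps for `1000 - 50 * i` milliseconds, so the last one started wakes first. Here’s the arithmetic as a tiny sketch (`sleep_millis` and `stop_order` are hypothetical helpers that only predict the order, they don’t run Tokio)…

```rust
/// Sleep duration of Async Function `i`, same formula as `my_bg_task`
pub fn sleep_millis(i: u64) -> u64 {
    1000 - 50 * i
}

/// Hypothetical helper: Predict the order in which the Async Functions stop,
/// by sorting them by sleep duration (shortest sleep stops first)
pub fn stop_order(count: u64) -> Vec<u64> {
    let mut tasks: Vec<u64> = (0..count).collect();
    tasks.sort_by_key(|&i| sleep_millis(i));
    tasks
}
```

`stop_order(4)` gives `[3, 2, 1, 0]`, matching the log above. And since the main thread only waits 750 ms, which is shorter than the shortest sleep (850 ms), “Finished time-consuming task” appears before any of the “stopping” messages.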

What if we increase the Worker Threads? From 1 to 2?

// Two Worker Threads instead of One

let runtime = tokio::runtime::Builder

::new_multi_thread() // New Multi-Threaded Scheduler

.worker_threads(2) // With Two New POSIX Threads for our Scheduler

The output looks exactly the same…

nsh> hello_rust_cargo

pthread_create

nx_pthread_create

pthread_create

nx_pthread_create

Task 0 sleeping for 1000 ms

Task 1 sleeping for 950 ms

Task 2 sleeping for 900 ms

Task 3 sleeping for 850 ms

Finished time-consuming task

Task 3 stopping

Task 2 stopping

Task 1 stopping

Task 0 stopping

Except that we see Two Calls to pthread_create and nx_pthread_create. Tokio called NuttX to create Two POSIX Threads. (For the Multi-Threaded Scheduler)

How did we log pthread_create?

Inside NuttX Kernel: We added Debug Code to pthread_create and nx_pthread_create

// At https://github.com/apache/nuttx/blob/master/libs/libc/pthread/pthread_create.c#L88

#include <debug.h>

int pthread_create(...) {

_info("pthread_entry=%p, arg=%p", pthread_entry, arg);

// At https://github.com/apache/nuttx/blob/master/sched/pthread/pthread_create.c#L179

#include <debug.h>

int nx_pthread_create(...) {

_info("entry=%p, arg=%p", entry, arg);

What happens when we call nix crate as-is on NuttX?

Earlier we said that we Customised the nix Crate to run on NuttX.

Why? Let’s build our Rust Blinky App with the Original nix Crate…

$ pushd ../apps/examples/rust/hello

$ cargo add nix --features fs,ioctl

Adding nix v0.29.0 to dependencies

Features: + fs + ioctl

33 deactivated features

$ popd

$ make -j

error[E0432]: unresolved import `self::consts`

--> errno.rs:19:15

19 | pub use self::consts::*;

| ^^^^^^ could not find `consts` in `self`

error[E0432]: unresolved import `self::Errno`

--> errno.rs:198:15

198 | use self::Errno::*;

| ^^^^^ could not find `Errno` in `self`

error[E0432]: unresolved import `crate::errno::Errno`

--> fcntl.rs:2:5

2 | use crate::errno::Errno;

| ^^^^^^^^^^^^^^-----

| no `Errno` in `errno`

Plus many errors. That’s why we Customised the nix Crate for NuttX…

$ cd ../apps/examples/rust/hello

$ cargo add nix \

--features fs,ioctl \

--git https://github.com/lupyuen/nix.git \

--branch nuttx

Updating git repository `https://github.com/lupyuen/nix.git`

Adding nix (git) to dependencies

Features: + fs + ioctl

34 deactivated features

Here’s how…

For Easier Porting: We cloned nix locally…

git clone \

https://github.com/lupyuen/nix \

--branch nuttx

cd ../apps/examples/rust/hello

cargo add nix \

--features fs,ioctl \

--path $HOME/nix

We extended errno.rs, copying the FreeBSD Section [cfg(target_os = "freebsd")] to NuttX Section [cfg(target_os = "nuttx")].

(We removed the bits that don’t exist on NuttX)

NuttX seems to have a similar POSIX Profile to Redox OS? We changed plenty of code to look like this: sys/time.rs

// NuttX works like Redox OS

#[cfg(not(any(target_os = "redox",

target_os = "nuttx")))]

pub const UTIME_OMIT: TimeSpec = ...

For NuttX ioctl(): It works more like BSD (second parameter is int) than Linux (second parameter is long): sys/ioctl/mod.rs

// NuttX ioctl() works like BSD

#[cfg(any(bsd,

solarish,

target_os = "haiku",

target_os = "nuttx"))]

#[macro_use]

mod bsd;

// Nope, NuttX ioctl() does NOT work like Linux

#[cfg(any(linux_android,

target_os = "fuchsia",

target_os = "redox"))]

#[macro_use]

mod linux;

Here are the files we modified for NuttX…

(Supporting fs and ioctl features only)

Troubleshooting nix ioctl() on NuttX

To figure out if nix passes ioctl() parameters correctly to NuttX: We insert Ioctl Debug Code into NuttX Kernel…

// At https://github.com/apache/nuttx/blob/master/fs/vfs/fs_ioctl.c#L261

#include <debug.h>

int ioctl(int fd, int req, ...) {

_info("fd=0x%x, req=0x%x", fd, req);

Which Ioctl Macro shall we call in nix? We tried ioctl_none…

const ULEDIOC_SETALL: i32 = 0x1d03;

ioctl_none!(led_on, ULEDIOC_SETALL, 1);

unsafe { led_on(fd).unwrap(); }

But the Ioctl Command Code got mangled up (0x201d0301 should be 0x1d03)

NuttShell (NSH) NuttX-12.8.0

nsh> hello_rust_cargo

fd=3

ioctl: fd=0x3, req=0x201d0301

thread '<unnamed>' panicked at src/lib.rs:31:25:

called `Result::unwrap()` on an `Err` value: ENOTTY

note: run with `RUST_BACKTRACE=1` environment variable to display a backtrace

Then we tried ioctl_write_int…

const ULEDIOC_SETALL: i32 = 0x1d03;

ioctl_write_int!(led_on, ULEDIOC_SETALL, 1);

unsafe { led_on(fd, 1).unwrap(); }

Nope the Ioctl Command Code is still mangled (0x801d0301 should be 0x1d03)

nsh> hello_rust_cargo

ioctl: fd=0x3, req=0x801d0301

thread '<unnamed>' panicked at src/lib.rs:30:28:

called `Result::unwrap()` on an `Err` value: ENOTTY

Finally this works: ioctl_write_int_bad…

const ULEDIOC_SETALL: i32 = 0x1d03;

ioctl_write_int_bad!(led_set_all, ULEDIOC_SETALL);

// Equivalent to ioctl(fd, ULEDIOC_SETALL, 1)

unsafe { led_set_all(fd, 1).unwrap(); }

// Equivalent to ioctl(fd, ULEDIOC_SETALL, 0)

unsafe { led_set_all(fd, 0).unwrap(); }

Ioctl Command Code 0x1d03 is hunky dory yay!

NuttShell (NSH) NuttX-12.8.0

nsh> hello_rust_cargo

fd=3

ioctl: fd=0x3, req=0x1d03

board_userled: LED 1 set to 1

board_userled: LED 2 set to 0

board_userled: LED 3 set to 0

ioctl: fd=0x3, req=0x1d03

board_userled: LED 1 set to 0

board_userled: LED 2 set to 0

board_userled: LED 3 set to 0
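Where did the mangled codes 0x201d0301 and 0x801d0301 come from? Our best guess: ioctl_none and ioctl_write_int don’t take a ready-made Command Code. They compose one from a Direction, a Group, a Number and a Length, BSD-style. The sketch below models that encoding. The constants and bit layout are our assumption of the BSD scheme (not copied from nix), the function names are hypothetical, and we assume a 4-byte int. The results do match both values in the logs above…

```rust
// Assumed BSD-style Direction Bits and Length Mask
const IOC_VOID: u32 = 0x2000_0000; // No parameters
const IOC_IN: u32 = 0x8000_0000;   // Parameter is copied in
const IOCPARM_MASK: u32 = 0x1fff;  // Length is limited to 13 bits

/// Compose an Ioctl Command Code from Direction, Group, Number and Length
pub const fn ioc(inout: u32, group: u32, num: u32, len: u32) -> u32 {
    inout | ((len & IOCPARM_MASK) << 16) | (group << 8) | num
}

/// Model of what ioctl_none!(name, group, num) would encode
pub const fn ioctl_none_code(group: u32, num: u32) -> u32 {
    ioc(IOC_VOID, group, num, 0)
}

/// Model of what ioctl_write_int!(name, group, num) would encode,
/// assuming the parameter is a 4-byte int
pub const fn ioctl_write_int_code(group: u32, num: u32) -> u32 {
    ioc(IOC_IN, group, num, 4)
}
```

Passing our Command Code 0x1d03 as the Group and 1 as the Number: `ioctl_none_code(0x1d03, 1)` is 0x201d0301 and `ioctl_write_int_code(0x1d03, 1)` is 0x801d0301. That’s why we switched to ioctl_write_int_bad, which sends 0x1d03 untouched.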

Will Rustix run on NuttX?

Nope not yet…

$ cd ../apps/examples/rust/hello

$ cargo add rustix \

--features fs \

--git https://github.com/lupyuen/rustix.git \

--branch nuttx

Updating git repository `https://github.com/lupyuen/rustix.git`

Adding rustix (git) to dependencies

Features: + alloc + fs + std + use-libc-auxv

29 deactivated features

We tried compiling this code…

#[no_mangle]

pub extern "C" fn hello_rust_cargo_main() {

use rustix::fs::{Mode, OFlags};

let file = rustix::fs::open(

"/dev/userleds",

OFlags::WRONLY,

Mode::empty(),

)

.unwrap();

println!("file={file:?}");

}

But it fails…

error[E0432]: unresolved import `libc::strerror_r`

--> .cargo/registry/src/index.crates.io-1949cf8c6b5b557f/errno-0.3.10/src/unix.rs:16:33

|

16 | use libc::{self, c_int, size_t, strerror_r, strlen};

| ^^^^^^^^^^

| |

| no `strerror_r` in the root

| help: a similar name exists in the module: `strerror`

Seems we need to fix libc::strerror_r for NuttX? Or maybe the errno crate.

In this section, we discover how Tokio works under the hood. Does it really call POSIX Functions in NuttX?

First we obtain the RISC-V Disassembly of our NuttX Image, bundled with the Hello Rust App. We trace the NuttX Build: make V=1

make distclean

tools/configure.sh rv-virt:leds64

## Disable CONFIG_ARCH_FPU

kconfig-tweak --disable CONFIG_ARCH_FPU

## Enable CONFIG_SYSTEM_TIME64 / CONFIG_FS_LARGEFILE / CONFIG_DEV_URANDOM / CONFIG_TLS_NELEM = 16

kconfig-tweak --enable CONFIG_SYSTEM_TIME64

kconfig-tweak --enable CONFIG_FS_LARGEFILE

kconfig-tweak --enable CONFIG_DEV_URANDOM

kconfig-tweak --set-val CONFIG_TLS_NELEM 16

## Enable Hello Rust Cargo App, increase the Stack Size

kconfig-tweak --enable CONFIG_EXAMPLES_HELLO_RUST_CARGO

kconfig-tweak --set-val CONFIG_EXAMPLES_HELLO_RUST_CARGO_STACKSIZE 16384

## Update the Kconfig Dependencies

make olddefconfig

## Build NuttX with Tracing Enabled

make V=1

According to the Make Trace: NuttX Build does this…

## Discard the Rust Debug Symbols

cd apps/examples/rust/hello

cargo build \

--release \

-Zbuild-std=std,panic_abort \

--manifest-path apps/examples/rust/hello/Cargo.toml \

--target riscv64imac-unknown-nuttx-elf

## Generate the Linker Script

riscv-none-elf-gcc \

-E \

-P \

-x c \

-isystem nuttx/include \

-D__NuttX__ \

-DNDEBUG \

-D__KERNEL__ \

-I nuttx/arch/risc-v/src/chip \

-I nuttx/arch/risc-v/src/common \

-I nuttx/sched \

nuttx/boards/risc-v/qemu-rv/rv-virt/scripts/ld.script \

-o nuttx/boards/risc-v/qemu-rv/rv-virt/scripts/ld.script.tmp

## Link Rust App into NuttX

riscv-none-elf-ld \

--entry=__start \

-melf64lriscv \

--gc-sections \

-nostdlib \

--cref \

-Map=nuttx/nuttx.map \

--print-memory-usage \

-Tnuttx/boards/risc-v/qemu-rv/rv-virt/scripts/ld.script.tmp \

-L nuttx/staging \

-L nuttx/arch/risc-v/src/board \

-o nuttx/nuttx \

--start-group \

-lsched \

-ldrivers \

-lboards \

-lc \

-lmm \

-larch \

-lm \

-lapps \

-lfs \

-lbinfmt \

-lboard xpack-riscv-none-elf-gcc-13.2.0-2/lib/gcc/riscv-none-elf/13.2.0/rv64imac/lp64/libgcc.a apps/examples/rust/hello/target/riscv64imac-unknown-nuttx-elf/release/libhello.a \

--end-group

Ah NuttX Build calls cargo build --release, stripping the Debug Symbols. We change it to cargo build and dump the RISC-V Disassembly…

## Preserve the Rust Debug Symbols

pushd ../apps/examples/rust/hello

cargo build \

-Zbuild-std=std,panic_abort \

--manifest-path apps/examples/rust/hello/Cargo.toml \

--target riscv64imac-unknown-nuttx-elf

popd

## Generate the Linker Script

riscv-none-elf-gcc \

-E \

-P \

-x c \

-isystem nuttx/include \

-D__NuttX__ \

-DNDEBUG \

-D__KERNEL__ \

-I nuttx/arch/risc-v/src/chip \

-I nuttx/arch/risc-v/src/common \

-I nuttx/sched \

nuttx/boards/risc-v/qemu-rv/rv-virt/scripts/ld.script \

-o nuttx/boards/risc-v/qemu-rv/rv-virt/scripts/ld.script.tmp

## Link Rust App into NuttX

riscv-none-elf-ld \

--entry=__start \

-melf64lriscv \

--gc-sections \

-nostdlib \

--cref \

-Map=nuttx/nuttx.map \

--print-memory-usage \

-Tnuttx/boards/risc-v/qemu-rv/rv-virt/scripts/ld.script.tmp \

-L nuttx/staging \

-L nuttx/arch/risc-v/src/board \

-o nuttx/nuttx \

--start-group \

-lsched \

-ldrivers \

-lboards \

-lc \

-lmm \

-larch \

-lm \

-lapps \

-lfs \

-lbinfmt \

-lboard xpack-riscv-none-elf-gcc-13.2.0-2/lib/gcc/riscv-none-elf/13.2.0/rv64imac/lp64/libgcc.a apps/examples/rust/hello/target/riscv64imac-unknown-nuttx-elf/debug/libhello.a \

--end-group

## Dump the disassembly to leds64-debug-nuttx.S

riscv-none-elf-objdump \

--syms --source --reloc --demangle --line-numbers --wide \

--debugging \

nuttx \

>leds64-debug-nuttx.S \

2>&1

Which produces the Complete NuttX Disassembly: leds64-debug-nuttx.S

Whoa the Complete NuttX Disassembly is too huge to inspect!

Let’s dump the RISC-V Disassembly of the Rust Part only: libhello.a

## Dump the libhello.a disassembly to libhello.S

riscv-none-elf-objdump \

--syms --source --reloc --demangle --line-numbers --wide \

--debugging \

apps/examples/rust/hello/target/riscv64imac-unknown-nuttx-elf/debug/libhello.a \

>libhello.S \

2>&1

Which produces the (much smaller) Rust Disassembly: libhello.S

Is Tokio calling NuttX to create POSIX Threads? We search libhello.S for pthread_create…

.rustup/toolchains/nightly-x86_64-unknown-linux-gnu/lib/rustlib/src/rust/library/std/src/sys/pal/unix/thread.rs:85

let ret = libc::pthread_create(&mut native, &attr, thread_start, p as *mut _);

auipc a0, 0x0 122: R_RISCV_PCREL_HI20 std::sys::pal::unix::thread::Thread::new::thread_start

mv a2, a0 126: R_RISCV_PCREL_LO12_I .Lpcrel_hi254

add a0, sp, 132

add a1, sp, 136

sd a1, 48(sp)

auipc ra, 0x0 130: R_RISCV_CALL_PLT pthread_create

OK that’s the Rust Standard Library calling pthread_create to create a new Rust Thread.

How are Rust Threads created in Rust Standard Library? Like this: std/thread/mod.rs

// spawn_unchecked_ creates a new Rust Thread

unsafe fn spawn_unchecked_<'scope, F, T>(

let my_thread = Thread::new(id, name);

And spawn_unchecked_ is called by Tokio, according to our Rust Disassembly…

<core::ptr::drop_in_place<std::thread::Builder::spawn_unchecked_::MaybeDangling<tokio::runtime::blocking::pool::Spawner::spawn_thread::{{closure}}>>>:

.rustup/toolchains/nightly-x86_64-unknown-linux-gnu/lib/rustlib/src/rust/library/core/src/ptr/mod.rs:523

add sp, sp, -16

sd ra, 8(sp)

sd a0, 0(sp)

auipc ra, 0x0 6: R_RISCV_CALL_PLT <std::thread::Builder::spawn_unchecked_::MaybeDangling<T> as core::ops::drop::Drop>::drop

Yep it checks out: Tokio calls Rust Standard Library, which calls NuttX to create POSIX Threads!
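We can exercise the same call path without Tokio in the way: a plain Rust Thread is enough. This is a minimal sketch, assuming a Unix-like target where the Rust Standard Library builds its threads on pthread_create (like the disassembly shows). The thread name, the 64 KB stack size and the run_worker helper are arbitrary picks for illustration…

```rust
use std::thread;

// Hypothetical helper: spawn one named thread and return the name it sees
fn run_worker() -> Option<String> {
    // Builder::spawn goes through spawn_unchecked_ inside the
    // Rust Standard Library, the same function seen in the disassembly
    let handle = thread::Builder::new()
        .name("worker".to_string()) // Arbitrary thread name
        .stack_size(64 * 1024)      // Arbitrary stack size for this sketch
        .spawn(|| {
            // Runs on the newly created thread
            thread::current().name().map(str::to_owned)
        })
        .expect("failed to spawn thread");

    // Wait for the thread to finish and fetch its result
    handle.join().expect("thread panicked")
}

fn main() {
    println!("thread name: {:?}", run_worker()); // thread name: Some("worker")
}
```

Dumping the disassembly of a build with just this code should show the same pthread_create relocation, minus the Tokio frames.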

Are we sure that Tokio creates a POSIX Thread? Not a NuttX Task?

We run hello_rust_cargo & to put it in the background…

nsh> hello_rust_cargo &

Hello world from tokio!

nsh> ps

PID GROUP PRI POLICY TYPE NPX STATE EVENT SIGMASK STACK USED FILLED COMMAND

0 0 0 FIFO Kthread - Ready 0000000000000000 0001904 0000712 37.3% Idle_Task

2 2 100 RR Task - Running 0000000000000000 0002888 0002472 85.5%! nsh_main

4 4 100 RR Task - Ready 0000000000000000 0007992 0006904 86.3%! hello_rust_cargo

ps says that there’s only One Single NuttX Task hello_rust_cargo. And no other NuttX Tasks.