📝 5 Jan 2025

“Because I’m bad, I’m bad, shamone

(bad, bad, really, really bad)

You know I’m bad, I’m bad (bad, bad) …

And the whole world has to answer right now

Just to tell you once again

Who’s bad?”

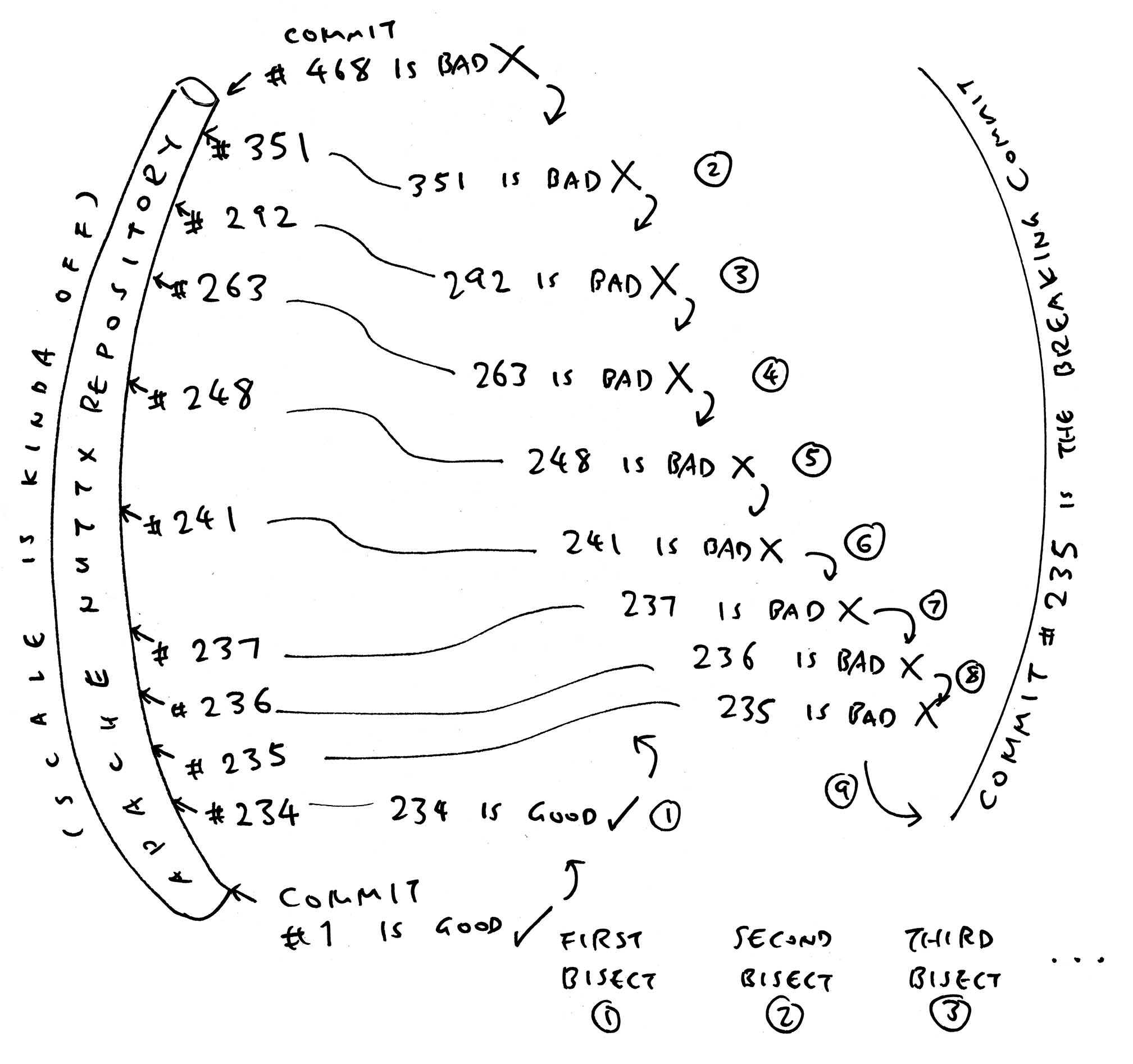

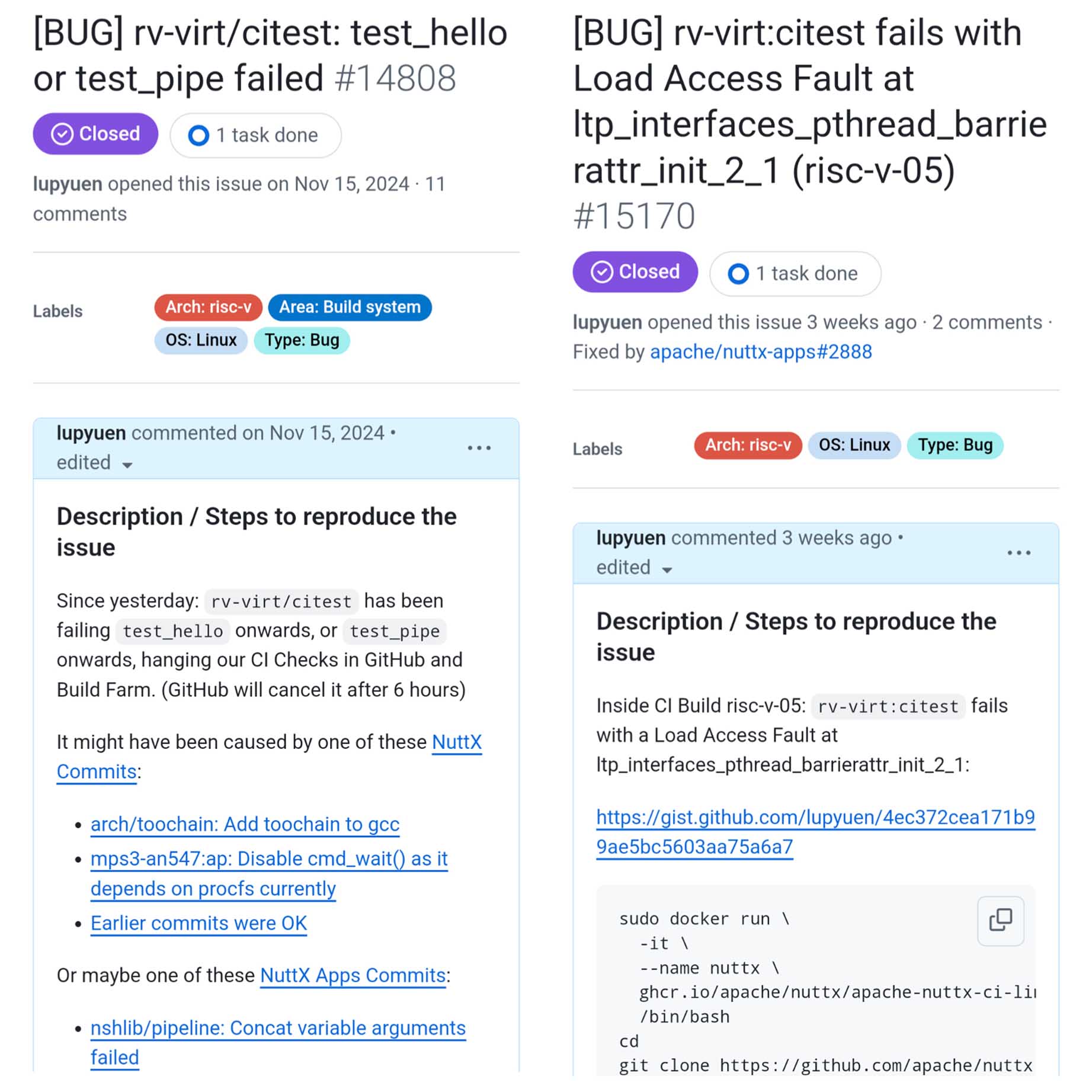

2 Weeks Ago: We saw a Runtime Bug in Apache NuttX RTOS. We think that the Breaking Commit (causing the bug) falls somewhere between these “Good” and “Bad” Commits…

| Commit #1 is Good 2024-11-14 | NuttX runs OK 6554ed4 |

| [ many commits ] | … |

| Commit #468 is Bad 2024-12-04 | NuttX hits Error 79a1ebb |

That’s 468 Commits. Which one is the Breaking Commit?

Maybe we Rewind Each Commit and test?

With a script, we could rewind and retest 468 Commits for Compile Errors. But it’s probably too slow for Runtime Errors. (Rewind + Recompile + Rerun)

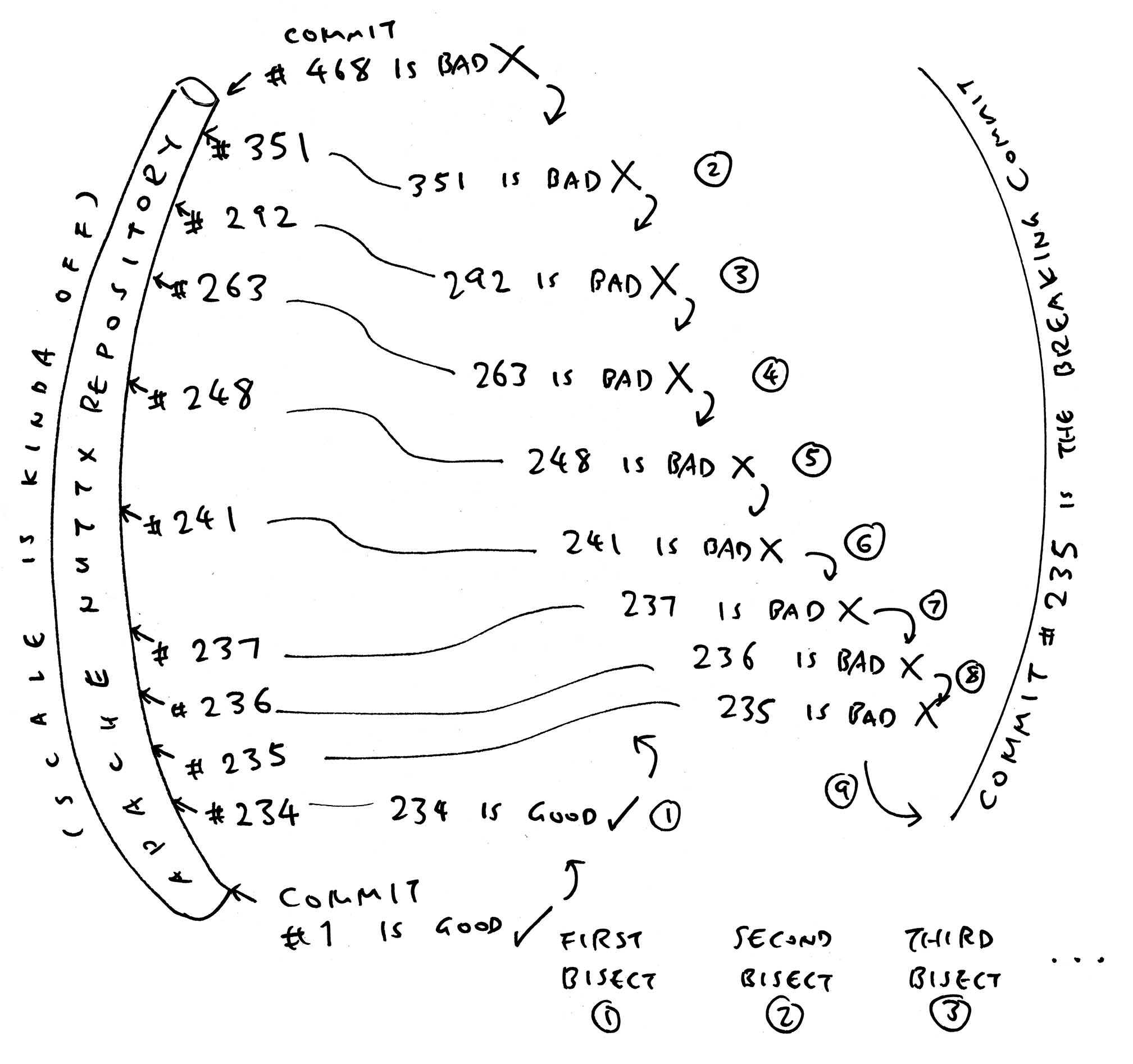

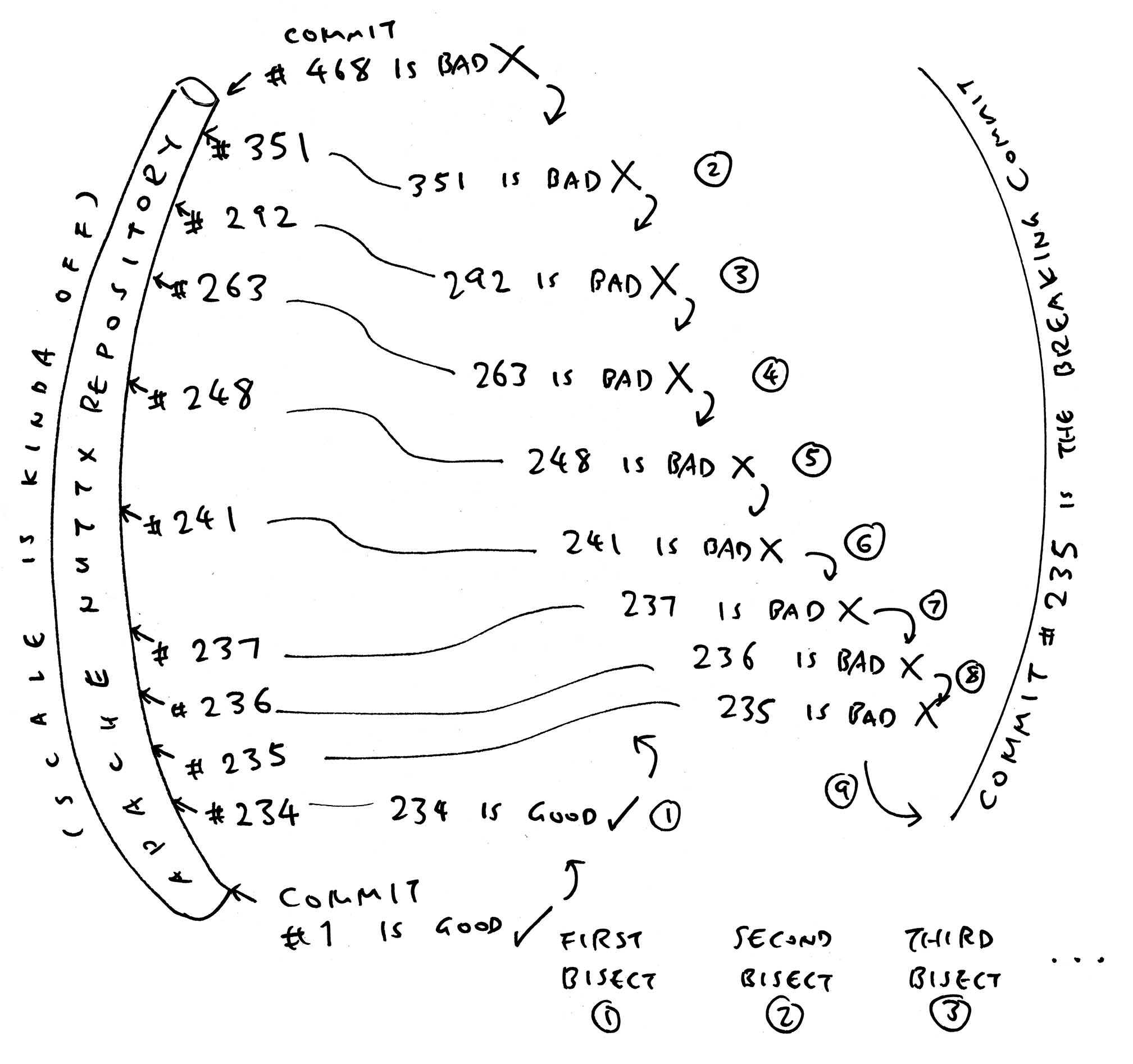

We have a quicker way: Git Bisect! (Pic above)

What’s this Git Bisect?

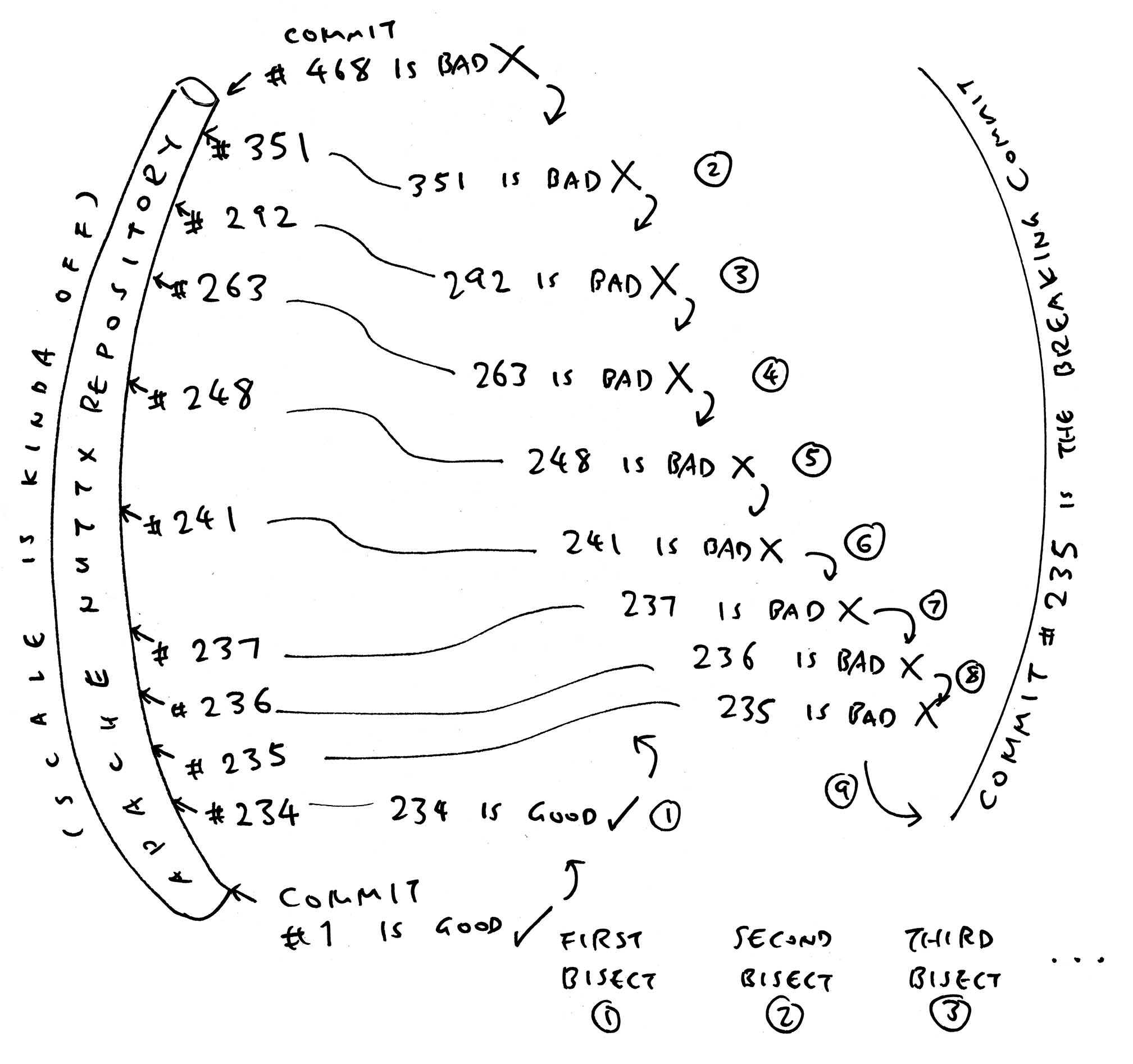

Remember Binary Chop?

“I’m thinking of A Number

Guess My Number!

It’s from 1 to 468

Ask me 9 Yes-No Questions”

To solve this, we Divide And Conquer: Is 234 too high? (no) Is 351 too high? (yes) Is 292 too high (yes)…

Git Bisect works the same way, but for Git Commits…

Our Breaking Commit is one of 468 Commits

Git Bisect shall Pick the Middle Commit and ask: “Is this a Good Commit or Bad Commit?”

Repeat until it discovers the Breaking Commit (in 9 steps)

Is it automated?

Yep Git Bisect will gleefully seek the Breaking Commit on its own… Assuming that we provide a Script to Assess the Goodness / Badness of a NuttX Commit: my-test-script.sh

## This script will be called by Git Bisect

## In Case of Error: Return the error to Git Bisect

set -e

## Get the NuttX Hash (Commit ID)

nuttx_hash=$(git rev-parse HEAD)

## For the NuttX Commit:

## We Build, Run and Test the NuttX Commit...

## make distclean || true

## tools/configure.sh ox64:nsh

## make -j

## But for now: We randomly simulate OK or Error

random_0_or_1=$(( $RANDOM % 2 ))

if [[ "$random_0_or_1" == "0" ]]; then

exit 0 ## Simulate OK

else

exit 1 ## Simulate Error

fi

## Beware: Don't return Exit Codes above 124!

## https://git-scm.com/docs/git-bisect#_bisect_runThis is how we start our Simulated Git Bisect: run.sh

## Download the NuttX Repo and NuttX Apps

git clone https://github.com/apache/nuttx

git clone https://github.com/apache/nuttx-apps apps

cd nuttx

## Tell Git Bisect the Good and Bad Commits

## (Or specify HEAD for the Latest Commit)

git bisect start

git bisect good 6554ed4 ## Commit #1 is Good

git bisect bad 79a1ebb ## Commit #468 is Bad

## Bisect with our Simulated Test Script

git bisect run \

$HOME/nuttx-bisect/my-test-script.sh

## Commit #235 is the Breaking Commit:

## 74bac56 is the first bad commitThat was quick! We break it down…

What just happened in our Simulated Git Bisect?

Git Bisect picked the Middle Commit #234…

## Testing Commit #234 (94a2ce3)

$HOME/nuttx-bisect/my-test-script.sh

nuttx_hash=94a2ce3

## Our Script simulates a successful test

exit 0 ## Simulate OKAnd discovered that Commit #234 is Good. (Via our Simulated Script)

Then it continued the Simulated Bisecting (coincidentally, all bad)

## Commit #351 is Bad

nuttx_hash=1cfaff0

exit 1 ## Simulate Error

## Commit #292 is Bad

nuttx_hash=65a93e9

exit 1 ## Simulate Error

## Commit #263 is Bad

nuttx_hash=1e265af

exit 1 ## Simulate Error

## Commit #248 is Bad

nuttx_hash=c70f3e3

exit 1 ## Simulate Error

## Commit #241 is Bad

nuttx_hash=5d86bee

exit 1 ## Simulate Error

## Commit #237 is Bad

nuttx_hash=e7c2e7c

exit 1 ## Simulate Error

## Commit #236 is Bad

nuttx_hash=68d47ee

exit 1 ## Simulate Error

## Commit #235 is Bad

nuttx_hash=74bac56

exit 1 ## Simulate ErrorFinally deducing that Commit #235 is the Breaking Commit

## Commit #235 is the Breaking Commit

74bac56 is the first bad commitThis works fine for our Simulated Git Bisect. Now we do it for real…

(OK maybe it’s no coincidence)

Will Git Bisect work for Real-Life NuttX?

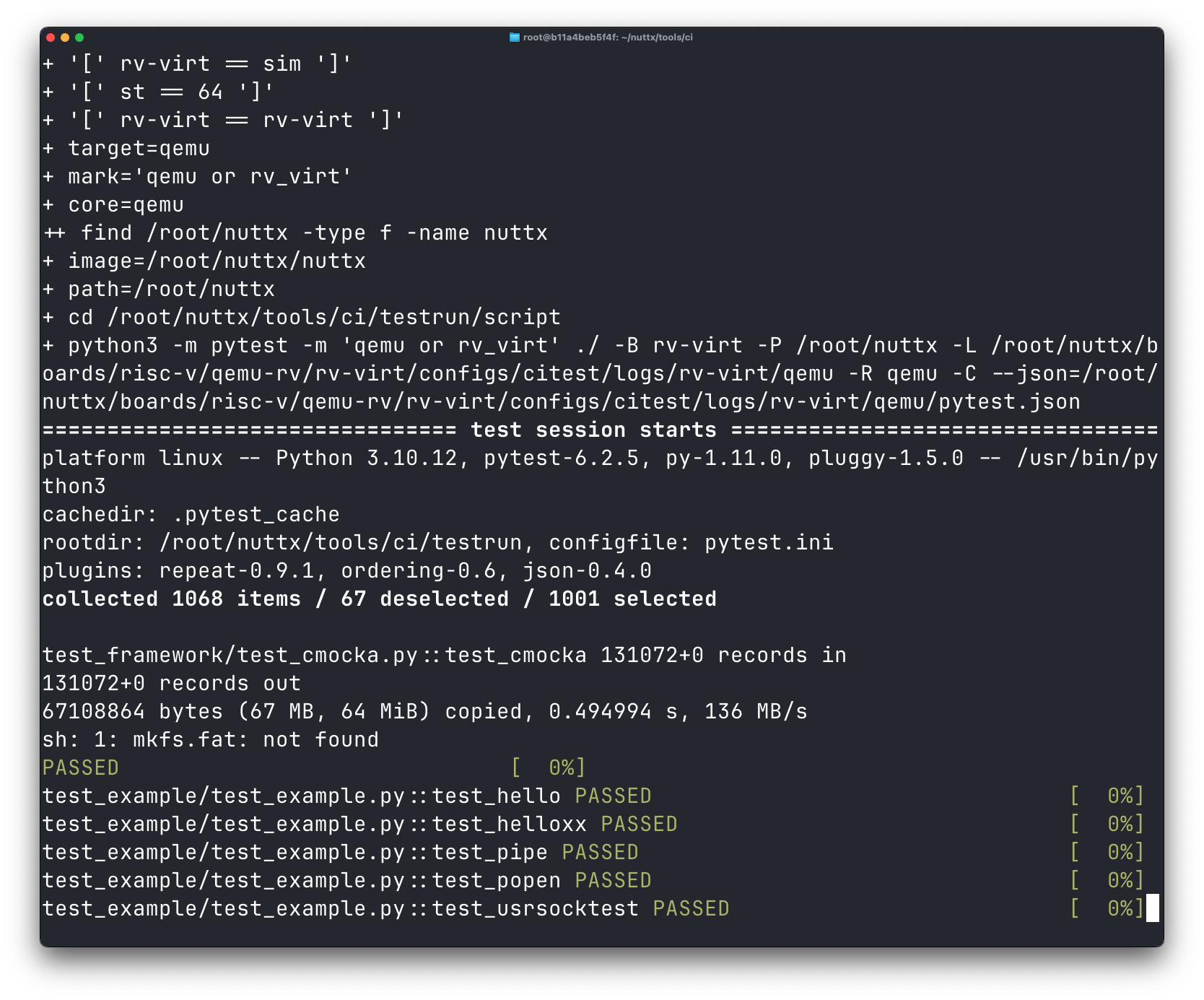

From our Bug Report: NuttX fails the Continuous Integration Test (CI Test) for RISC-V QEMU…

Configuration/Tool: rv-virt/citest

test_cmocka PASSED

test_hello PASSED

test_helloxx FAILED

test_pipe FAILED

test_usrsocktest FAILED

[...Failing all the way...]This happens inside the CI Job risc-v-05. Which we can reproduce with Docker Engine: run-job-bisect.sh

## TODO: Install Docker Engine

## https://docs.docker.com/engine/install/ubuntu/

## Assume we're running risc-v-05 with

## Latest NuttX Repo and NuttX Apps

job=risc-v-05

nuttx_hash=HEAD

apps_hash=HEAD

## Run the CI Job in Docker Container

## If CI Test Hangs: Kill it after 1 hour

sudo docker run -it \

ghcr.io/apache/nuttx/apache-nuttx-ci-linux:latest \

/bin/bash -c "

set -e ;

set -x ;

uname -a ;

cd ;

pwd ;

git clone https://github.com/apache/nuttx ;

git clone https://github.com/apache/nuttx-apps apps ;

echo Building nuttx @ $nuttx_hash / nuttx-apps @ $apps_hash ;

pushd nuttx ; git reset --hard $nuttx_hash ; popd ;

pushd apps ; git reset --hard $apps_hash ; popd ;

pushd nuttx ; echo NuttX Source: https://github.com/apache/nuttx/tree/\$(git rev-parse HEAD) ; popd ;

pushd apps ; echo NuttX Apps: https://github.com/apache/nuttx-apps/tree/\$(git rev-parse HEAD) ; popd ;

sleep 10 ;

cd nuttx/tools/ci ;

( sleep 3600 ; echo Killing pytest after timeout... ; pkill -f pytest )&

(

(./cibuild.sh -c -A -N -R testlist/$job.dat) || (res=\$? ; echo '***** JOB FAILED' ; exit \$res)

)

"(More about Docker Builds for NuttX)

Everything above becomes our Git Bisect Script that assesses “Goodness” vs “Badness” for a NuttX Commit: run-job-bisect.sh

## This script will be called by Git Bisect Wrapper...

## We run the CI Job in Docker Container

## (Copy from above)

sudo docker run -it ...

## Result the result to the caller

res=$?

if [[ "$res" == "0" ]]; then

exit 0 ## Return OK

else

exit 1 ## Return Error

fi

## Beware: Don't return Exit Codes above 124!

## https://git-scm.com/docs/git-bisect#_bisect_runWhich is called by our Git Bisect Wrapper: start-job-bisect.sh

## This wrapper script will be called by Git Bisect

## Must be run as `sudo`! (Because of Docker)

## Get the NuttX Hash (Commit ID)

nuttx_hash=$(git rev-parse HEAD)

## Run the CI Job for the NuttX Commit

## Passing the Job Name, NuttX Hash and Apps Hash

## (Or set Apps Hash to HEAD for the Latest Commit)

job=risc-v-05

apps_hash=1c7a7f7

$HOME/nuttx-bisect/run-job-bisect.sh \

$job $nuttx_hash $apps_hash

## This Git Bisect script will work for any CI Job!

## Just change `job=risc-v-05` to the CI Job Name (like arm-01)We’re ready to run this!

(Which apps_hash to use? NuttX Build History might help)

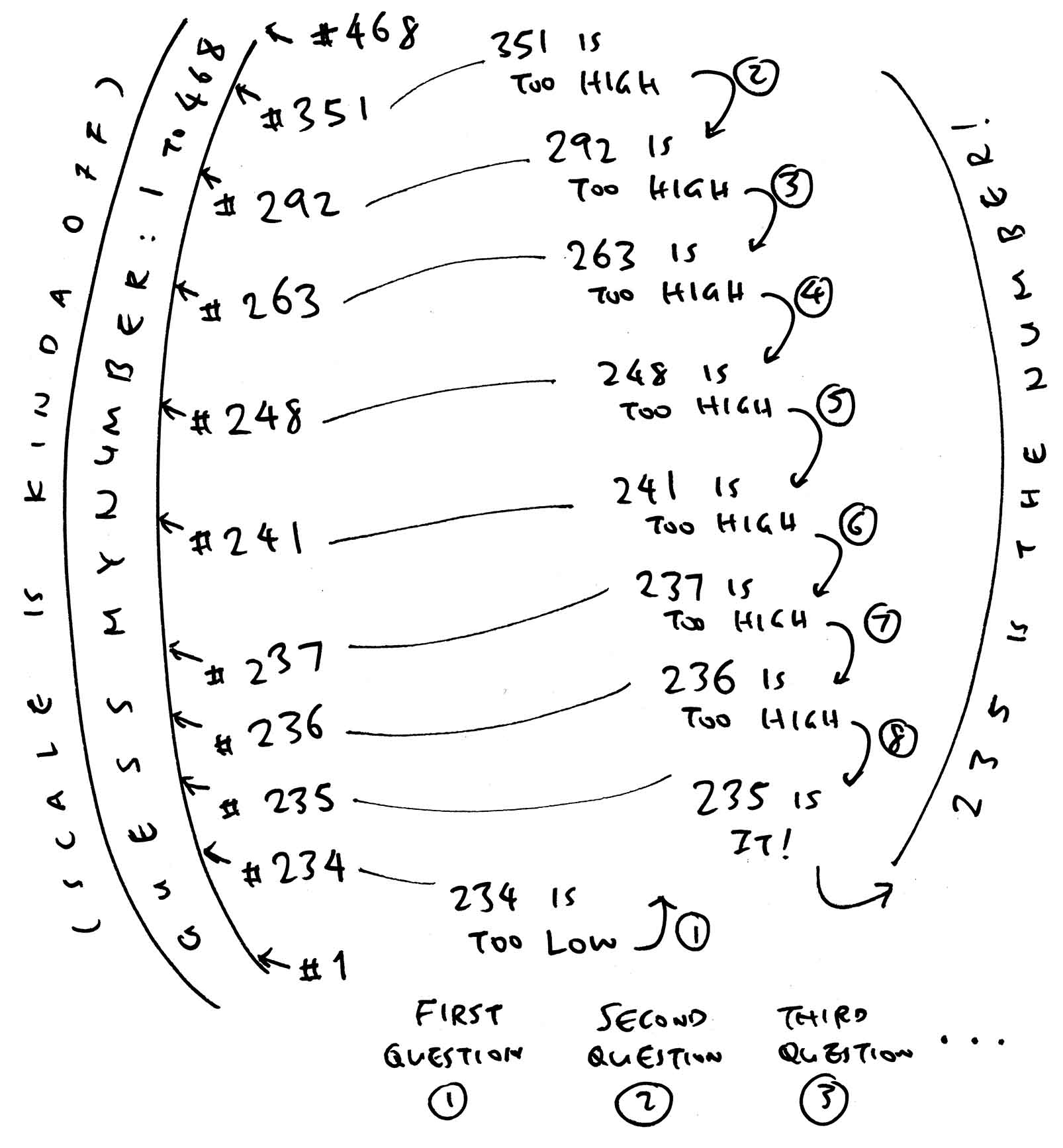

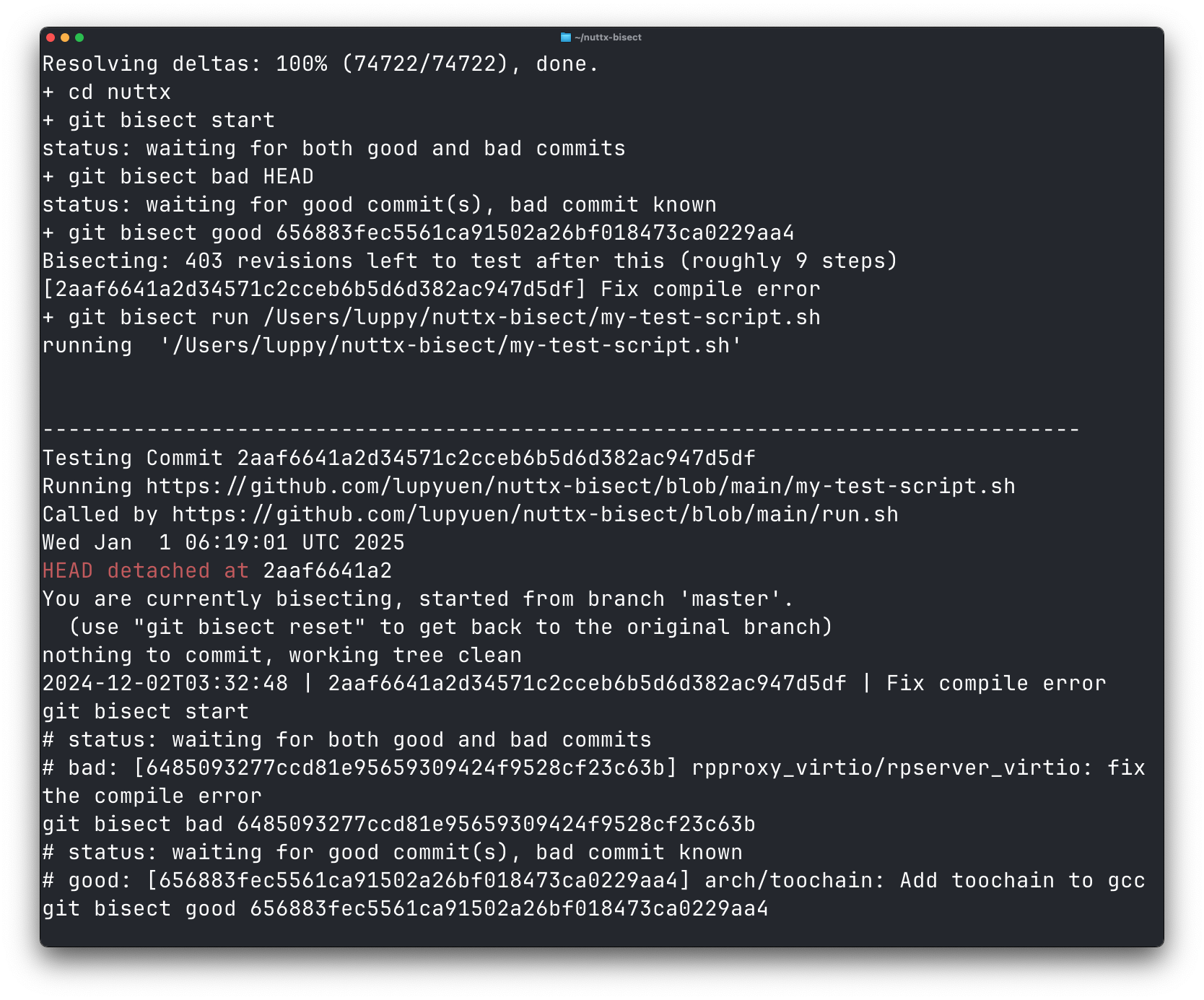

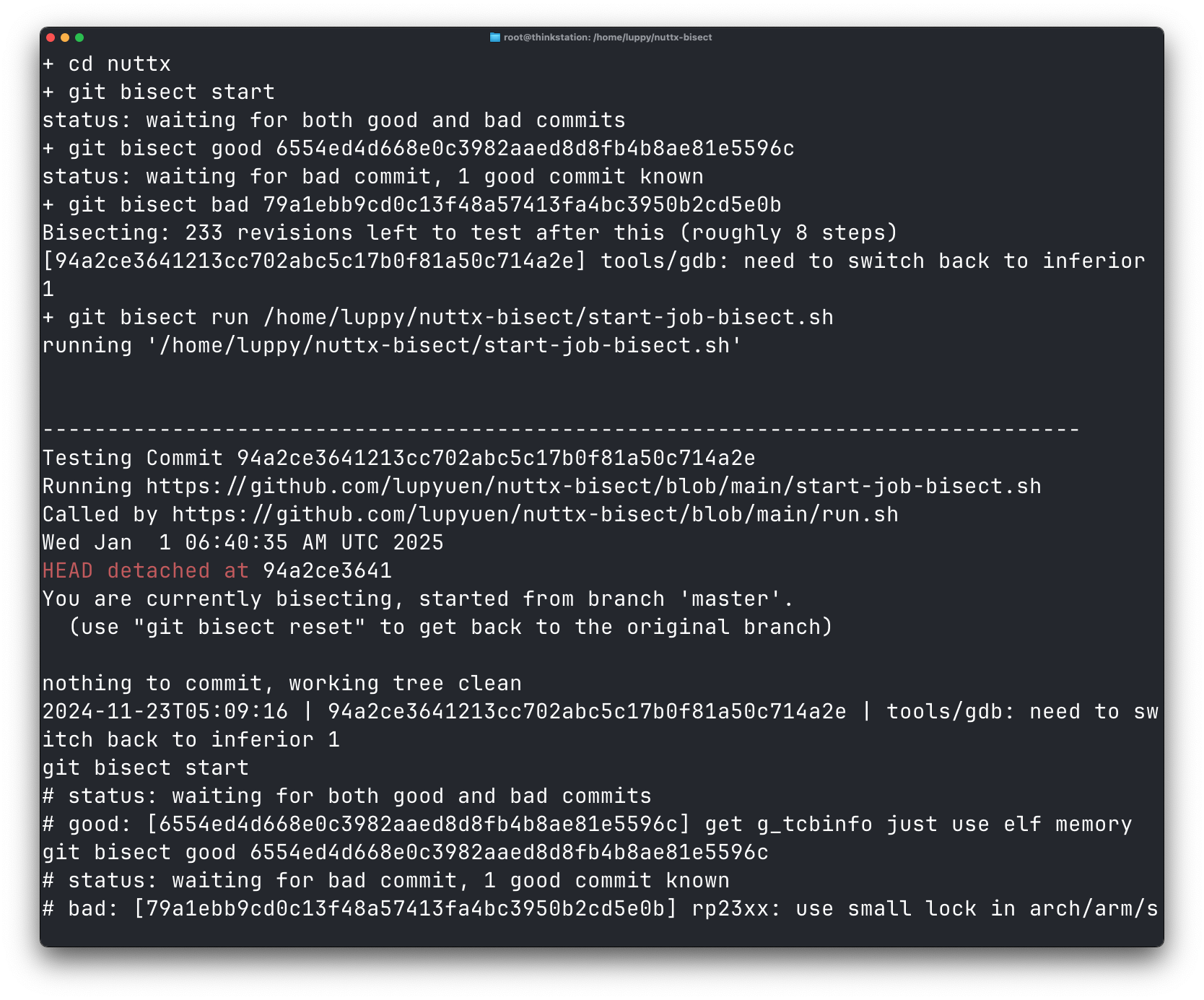

What happens in Git Bisect?

We start Git Bisect, telling it that Commit #1 is Good and Commit #468 is Bad: run2.sh

sudo --shell ## Needed by Docker

git clone https://github.com/apache/nuttx

cd nuttx

git bisect start

git bisect good 6554ed4 ## Commit #1

git bisect bad 79a1ebb ## Commit #468

git bisect run \

$HOME/nuttx-bisect/start-job-bisect.shGit Bisect picks the Middle Commit #234 and runs our script

## Testing Commit #234 (94a2ce3)

## For CI Job risc-v-05 (CI Test for RISC-V QEMU)

## With NuttX Apps (1c7a7f7)

$HOME/nuttx-bisect/run-job-bisect.sh \

risc-v-05 \

94a2ce3 \

1c7a7f7And discovers that Commit #234 is Good (via our script)

## Commit #234: Completed CI Test successfully

Configuration/Tool: rv-virt/citest

test_ostest PASSED

exit 0Beware: Every Bisect will be Super Slow! Whenever something fails: CI Test will hang the CI Job.

(Our script will kill CI Test after 1 hour)

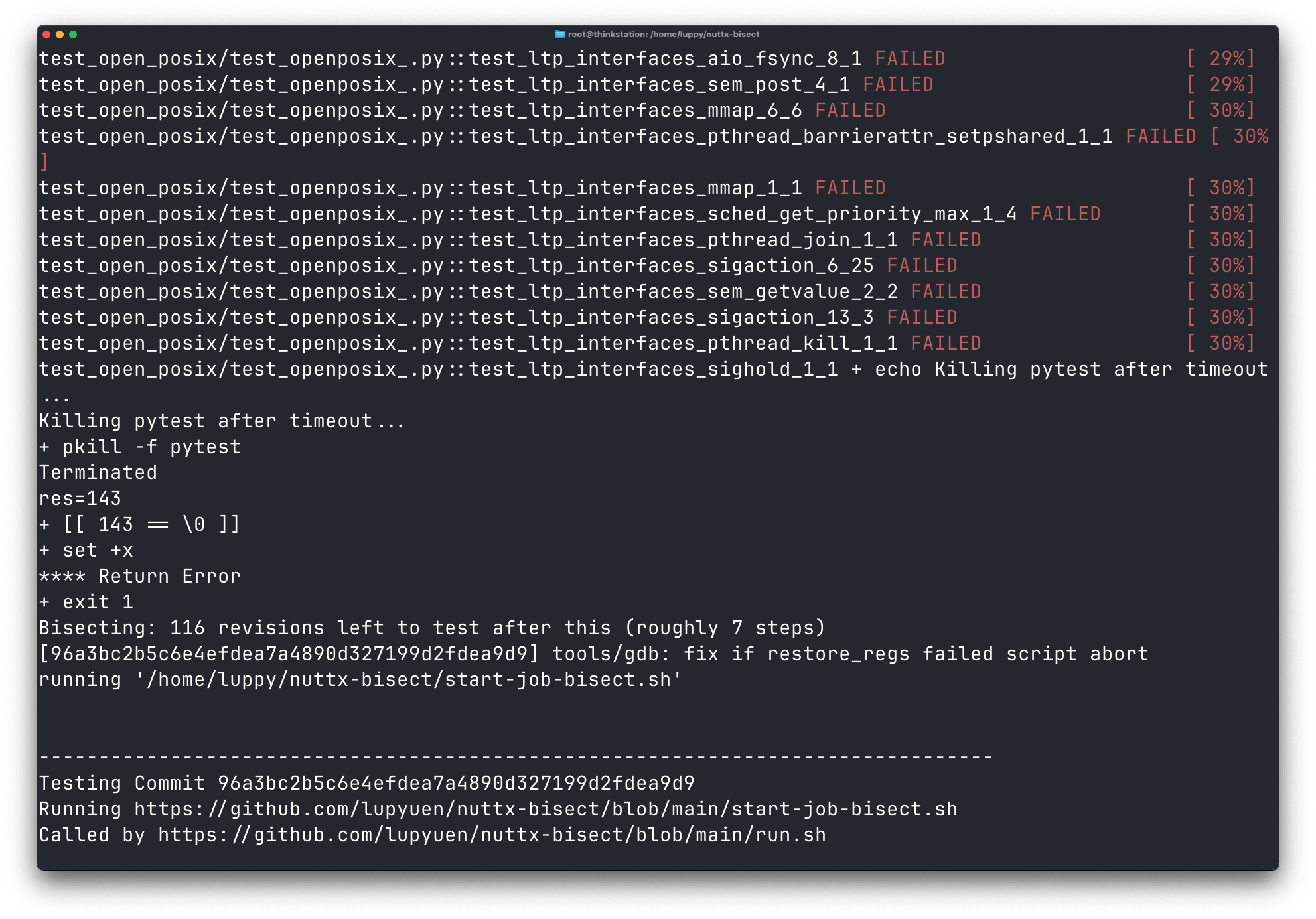

Then it continues bisecting. Assessing Commits #351, #292, #263, #248, #241, #237, #236, #235

## Commit #351 is Bad

run-job-bisect.sh ... 1cfaff0 ...

test_ltp_interfaces_pthread_barrierattr_init_2_1 FAILED

exit 1

## Commit #292 is Bad

run-job-bisect.sh ... 65a93e9 ...

test_ltp_interfaces_pthread_barrierattr_init_2_1 FAILED

exit 1

## Commit #263 is Bad

run-job-bisect.sh ... 1e265af ...

test_ltp_interfaces_sigrelse_1_1 FAILED

exit 1

## Commit #248 is Bad

run-job-bisect.sh ... c70f3e3 ...

test_ltp_interfaces_pthread_barrierattr_init_2_1 FAILED

exit 1

## Commit #241 is Bad

run-job-bisect.sh ... 5d86bee ...

test_ltp_interfaces_mq_open_7_3 FAILED

exit 1

## Commit #237 is Bad

run-job-bisect.sh ... e7c2e7c ...

test_ltp_interfaces_sigaction_23_7 FAILED

exit 1

## Commit #236 is Bad

run-job-bisect.sh ... 68d47ee ...

test_ltp_interfaces_pthread_getcpuclockid_1_1 FAILED

exit 1

## Commit #235 is Bad

run-job-bisect.sh ... 74bac56 ...

test_ltp_interfaces_pthread_detach_1_1 FAILED

exit 1Which says in 9 steps (pic below)

| Commit #468 | Is Bad |

Commit #234 | Is Good |

Commit #351 | Is Bad |

Commit #292 | Is Bad |

Commit #263 | Is Bad |

Commit #248 | Is Bad |

Commit #241 | Is Bad |

Commit #237 | Is Bad |

Commit #236 | Is Bad |

Commit #235 | Is Bad (really really) |

Finally deducing that Commit #235 is the Breaking Commit (pic below)

## Commit #235 is the Breaking Commit

74bac56 is the first bad commit

How long did Git Bisect run?

8 Hours! I left it simmering overnight. (Just like my Six-Bean Stew)

Remember that Every Bisect takes 1 Hour to Recompile + Rerun, because of the Stuck CI Test. That’s why Git Bisect works especially well for slower jobs.

Did Git Bisect find the correct Breaking Commit?

To be really really sure: We run Git Bisect one more time…

## Restart the Git Bisect (`sudo` is needed by Docker)

sudo --shell

git bisect start

git bisect good 6554ed4 ## Commit #1

git bisect bad 79a1ebb ## Commit #468

git bisect run \

$HOME/nuttx-bisect/start-job-bisect.shHere comes the twist, watch carefully…

## Commit #234 is Bad

run-job-bisect.sh ... 94a2ce3 ...

test_ltp_interfaces_mq_close_3_2 FAILED

exit 1

## Commit #117 is Good

run-job-bisect.sh ... 96a3bc2 ...

test_ostest PASSED

exit 0

## Commit #138 is Bad

run-job-bisect.sh ... 3a46b6e ...

test_ltp_interfaces_pthread_barrierattr_init_2_1 FAILED

exit 1

## Commit #146 is Bad

run-job-bisect.sh ... dac3f31 ...

test_ltp_interfaces_sigaction_6_3 FAILED

exit 1

## Commit #131 is Good

run-job-bisect.sh ... 4c3ae2e ...

test_ostest PASSED

exit 0

## Commit #138 is Bad

run-job-bisect.sh ... 3b50bf1 ...

test_ltp_interfaces_pthread_barrierattr_init_2_1 FAILED

exit 1

## Commit #134 is Bad

run-job-bisect.sh ... 5ff98f6 ...

test_ltp_interfaces_pthread_barrierattr_init_2_1 FAILED

exit 1

## Commit #133 is Bad

run-job-bisect.sh ... b4d8ac8 ...

test_ltp_interfaces_pthread_barrierattr_init_2_1 FAILED

exit 1

## Commit #132 is Bad

run-job-bisect.sh ... fb92b60 ...

test_ltp_interfaces_pthread_barrierattr_init_2_1 FAILED

exit 1Which deduces…

## Commit #132 is the Breaking Commit

fb92b60 is the first bad commit

## Previously: Commit #235 is the Breaking Commit!

## 74bac56 is the first bad commitCommit #132 is now the Breaking Commit, not Commit #235!

Hmmm something has changed. Why?

| Commit #468 | Is Bad |

Commit #234 | Is Bad |

Commit #117 | Is Good |

Commit #138 | Is Bad |

Commit #146 | Is Bad |

Commit #131 | Is Good |

Commit #138 | Is Bad |

Commit #134 | Is Bad |

Commit #133 | Is Bad |

Commit #132 | Is Bad |

Commit #132 | Is the Breaking Commit (really really?) |

Why is Git Bisect telling us a different Breaking Commit?

In The Movies: Arnold travels to the past (in a Time Machine), changing something in history, and the future changes.

In Real Life: Commit #234 has changed in history. Altering our future! (Pic above)

## Previously...

## Commit #234 is Good

run-job-bisect.sh ... 94a2ce3 ...

test_ostest PASSED

exit 0

## But Now...

## Commit #234 is Bad

run-job-bisect.sh ... 94a2ce3 ...

test_ltp_interfaces_mq_close_3_2 FAILED

exit 1After this, everything changed. Concluding with a Different Breaking Commit. (Think “alternate timeline”)

Huh! How did Commit #234 change?

This CI Test looks more complicated than we thought. CI Test appears to be failing with the slightest change in QEMU Memory. For a Specific Commit: Our bug isn’t reliably reproducible.

Lesson Learnt: Git Bisect works best for bugs that are reliably reproducible for a specified commit!

Can we use Git Bisect with Real Hardware? On an Actual NuttX Device?

Yep sure Git Bisect will work with any NuttX Device that can be controlled by a script.

For Example: SG2000 RISC-V SBC has a script for Building NuttX and Booting via TFTP (which can be controlled by Git Bisect)

Though Honestly: SG2000 Emulator would be much quicker (and more reliable) for Git Bisect…

“RISC-V Emulator for Sophgo SG2000 SoC (Pine64 Oz64 / Milk-V Duo S)”

(No script? Try Manual Git Bisect)

OK so Git Bisect wasn’t 100% successful. How did we fix the bug?

Actually we have Two Bugs stacked together…

The First Bug was a Stack Overflow that Tiago Medicci Serrano kindly fixed by increasing the Init Task Stack Size…

The Second Bug? Not so obvious which Stack Overflowed…

## Start the NuttX Docker Image

sudo docker run \

-it \

ghcr.io/apache/nuttx/apache-nuttx-ci-linux:latest \

/bin/bash

## Run the CI Test in Docker

cd

git clone https://github.com/apache/nuttx

git clone https://github.com/apache/nuttx-apps apps

pushd nuttx ; echo NuttX Source: https://github.com/apache/nuttx/tree/$(git rev-parse HEAD) ; popd

pushd apps ; echo NuttX Apps: https://github.com/apache/nuttx-apps/tree/$(git rev-parse HEAD) ; popd

cd nuttx/tools/ci

./cibuild.sh -c -A -N -R testlist/risc-v-05.dat

## Wait for it to fail

## Press Ctrl-C a few times to stop it

## Then dump the log

cat /root/nuttx/boards/risc-v/qemu-rv/rv-virt/configs/citest/logs/rv-virt/qemu/*

## Output shows: Stack Overflow for ltp_interfaces_pthread_barrierattr_init_2_1

nsh> ltp_interfaces_pthread_barrierattr_init_2_1

riscv_exception: EXCEPTION: Load access fault. MCAUSE: 00000005, EPC: 800074c6, MTVAL: 000002a4

STACKSIZE USED FILLED COMMAND

3936 3936 100.0%! ltp_interfaces_pthread_barriera

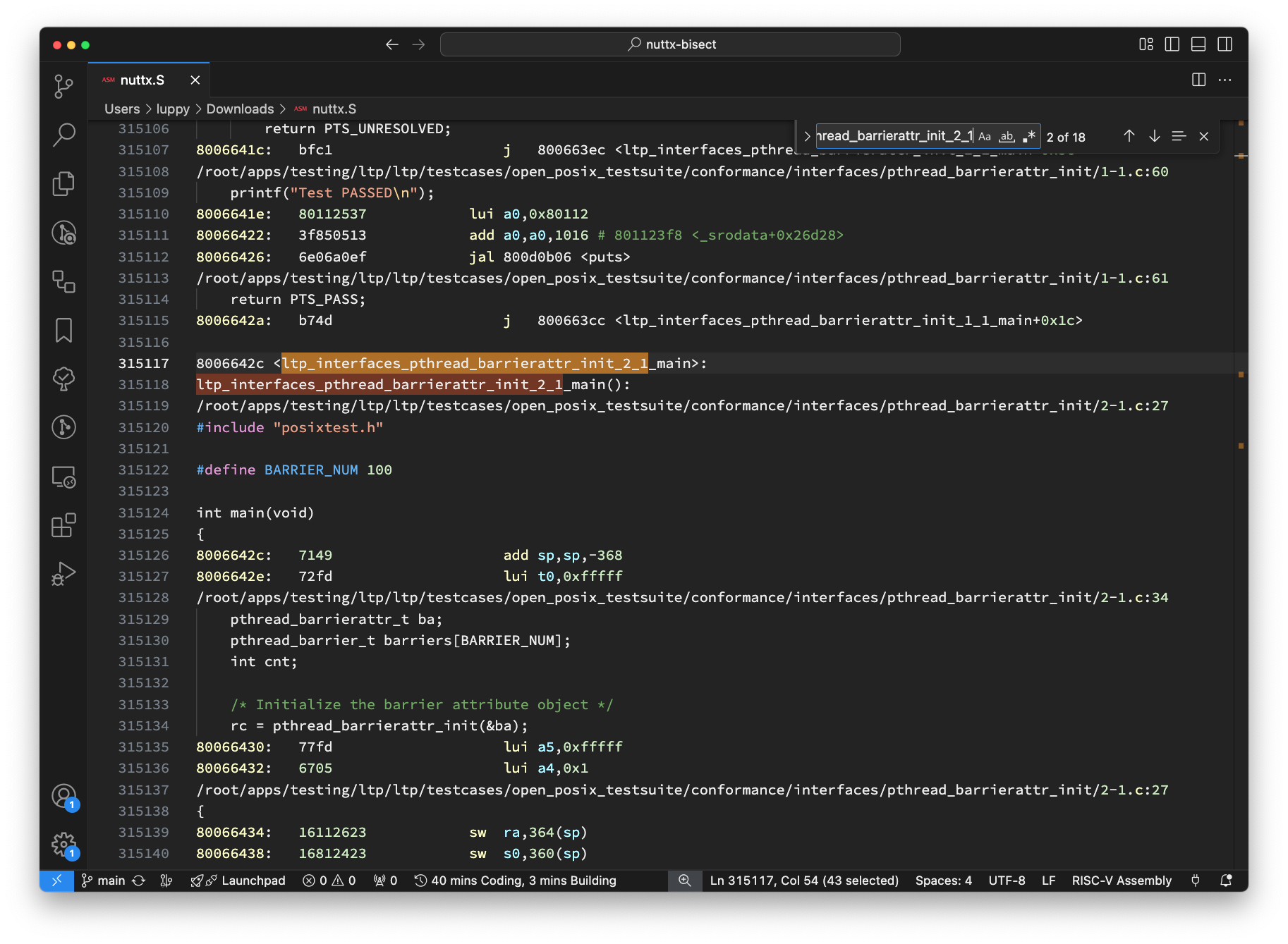

What’s ltp_interfaces_pthread_barrierattr_init_2_1? Why is the Stack Overflowing?

We search the NuttX Disassembly (pic above)

## Dump the disassembly to nuttx.S

cd /root/nuttx

riscv-none-elf-objdump \

--syms --source --reloc --demangle --line-numbers --wide \

--debugging \

nuttx \

>nuttx.S \

2>&1

## Search the disassembly for ltp_interfaces_pthread_barrierattr_init_2_1

grep nuttx.S \

ltp_interfaces_pthread_barrierattr_init_2_1And we see…

8006642c <ltp_interfaces_pthread_barrierattr_init_2_1_main>:

ltp_interfaces_pthread_barrierattr_init_2_1_main():

apps/testing/ltp/ltp/testcases/open_posix_testsuite/conformance/interfaces/pthread_barrierattr_initAha! It points to a NuttX Test App: nuttx-apps/testing/ltp

Thus we edit the Stack Configuration: testing/ltp/Kconfig

## Before: Stack Size is 4 KB

config TESTING_LTP_STACKSIZE

int "Linux Test Project stack size"

default 4096And we double the Stack Size to 8 KB…

## After: Stack Size is 8 KB

default 8192We retest in Docker. And our CI Test succeeds yay!

But why did we run out of Stack Space? Has something grown too big?

We could run Bloaty to do detailed analysis of the Code and Data Size…

Next Article: What would NuttX Life be like without GitHub? We try out (self-hosted open-source) Forgejo Git Forge with NuttX.

After That: Why Sync-Build-Ingest is super important for NuttX CI. And how we monitor it with our Magic Disco Light.

After After That: Since we can Rewind NuttX Builds and automatically Git Bisect… Can we create a Bot that will fetch the Failed Builds from NuttX Dashboard, identify the Breaking PR, and escalate to the right folks?

But First…

Many Thanks to the awesome NuttX Admins and NuttX Devs! And My Sponsors, for sticking with me all these years.

Got a question, comment or suggestion? Create an Issue or submit a Pull Request here…

Earlier we ran out of Stack Space. Has something grown too big?

We could run Bloaty to do detailed analysis of the Code and Data Size…

For quick experimenting: Bloaty is bundled inside our NuttX Docker Image…

## Inside NuttX Docker:

## Assume we compiled NuttX at /root/nuttx/nuttx

$ /tools/bloaty/bin/bloaty /root/nuttx/nuttx

FILE SIZE VM SIZE

-------------- --------------

46.1% 6.80Mi 0.0% 0 .debug_info

17.1% 2.53Mi 0.0% 0 .debug_line

8.6% 1.26Mi 0.0% 0 .debug_abbrev

6.6% 1000Ki 0.0% 0 .debug_loclists

6.2% 941Ki 64.9% 941Ki .text

5.1% 772Ki 0.0% 0 .debug_str

2.5% 381Ki 26.3% 381Ki .rodata

1.8% 277Ki 0.0% 0 .debug_frame

1.7% 254Ki 0.0% 0 .symtab

1.2% 174Ki 0.0% 0 .strtab

1.1% 166Ki 0.0% 0 .debug_rnglists

1.1% 164Ki 0.0% 0 .debug_line_str

0.0% 0 8.1% 118Ki .bss

0.8% 114Ki 0.0% 0 .debug_aranges

0.1% 8.31Ki 0.6% 8.27Ki .data

0.0% 5.00Ki 0.1% 858 [104 Others]

0.0% 3.89Ki 0.0% 0 [Unmapped]

0.0% 2.97Ki 0.0% 0 .shstrtab

0.0% 296 0.0% 256 .srodata.cst8

0.0% 196 0.0% 0 [ELF Headers]

0.0% 144 0.0% 104 .sdata.called

100.0% 14.8Mi 100.0% 1.42Mi TOTAL

## Let's dump the details

## For NuttX QEMU RISC-V

$ /tools/bloaty/bin/bloaty \

/root/nuttx/nuttx \

-d compileunits

bloaty: Unknown ELF machine value: 243

## Oops Bloaty won't work for RISC-V Executable!Standard Bloaty won’t support RISC-V. But Fuchsia Bloaty supports it.

We compile and run Fuchsia Bloaty…

## Compile Fuchsia Bloaty for RISC-V Support

git clone https://fuchsia.googlesource.com/third_party/bloaty

cd bloaty

cmake -B build -G Ninja -S .

cmake --build build

## Run Fuchsia Bloaty on NuttX QEMU RISC-V

## Dump all the details

cd /root/nuttx

/root/bloaty/build/bloaty nuttx \

-d compileunits,segments,sections,symbolsNow we see everything in our NuttX RISC-V Executable…

FILE SIZE VM SIZE

-------------- --------------

62.7% 9.26Mi 66.2% 960Ki [2505 Others]

7.5% 1.11Mi 4.8% 69.2Ki EEE6secondB8un170006Ev

94.1% 1.04Mi 0.0% 0 [Unmapped]

55.6% 594Ki NAN% 0 .debug_info

21.3% 227Ki NAN% 0 .debug_line

17.4% 185Ki NAN% 0 .debug_str

3.1% 33.7Ki NAN% 0 .strtab

54.6% 18.4Ki NAN% 0 [160 Others]

7.8% 2.61Ki NAN% 0 std::__1::(anonymous namespace)::make<>()::buf

6.5% 2.20Ki NAN% 0 std::__1::num_get<>::do_get()

4.5% 1.52Ki NAN% 0 std::__1::num_put<>::do_put()